Part 1: Creating Index, Index Operations and Best Practices.

- Adding data to Elastic search, we need an index( a place to store related data).

- In reality, an index is just a logical namespace that points to one or more physical shards.

- Index building with proper schema structure will play important role in Elastic Search all the operation’s related to data are performed through index. It is obvious that we can store data in index.

- So to build such type of good indices we need to consider some scenarios before building your indices.

- Once an index is built, we can search with full text search and term search etc.

- Following are the concepts which we need to consider while building index or indices.

Index Creation

Creating index

- Index Anatomy

- Insert data in to index

- Update data in to index

- Delete index

- Best Practices to create index.

Choosing Shards:

- By default Elastic Search provides a well suitable configuration by minimum tweaking.

- Index will be created with 5 shards and 1 Replica Shard.

Rules:

- We can change the replica dynamically by using update API . Replica change is dependant on our Hardware’s capability in our Cluster, this will help us to achieve fail over process on cluster.

- Once an index is created, we cannot change the shards. To remove the index there is a need of re-indexing the data again.

Why we cannot change shards count once we create index?

- Elastic Search has good reason & mechanism behind changing shards count. The following is the formula implemented by ES to route document to which shard in cluster.

shard = hash(routing) % number_of_primary_shards- Above formula explains why the number of primary shards can be set only when an index is created and never changed.

- If the number of primary shards ever get changed in the future, all previous routing values would be invalid and documents would never be found.

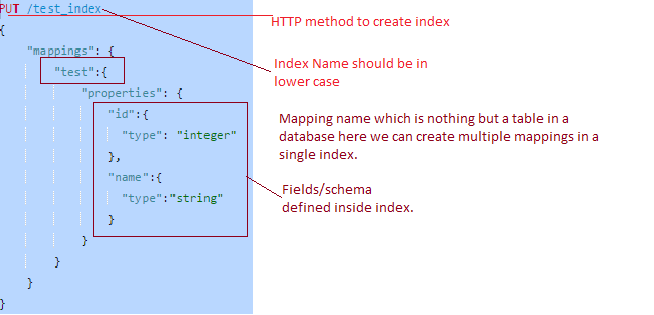

Creating Index:

Note: Index name should be in Lower case only. ES will not accept Upper case.

Creating sample Index:

To create index, we can use CURL script or sense tool chrome plugin.

Using Sense:

PUT/test_index{

"mappings": {

"test": {

"properties": {

"id": {

"type": "integer"

},

"name": {

"type": "string"

}

}

}

}

}

Index Anatomy:

Using CURL:

curl -XPUT "http://10.0.1.202:9200/test_index" -d’

{

"mappings": {

"test": {

"properties": {

"id": {

"type": "integer"

},

"name": {

"type": "string"

}

}

}

}

}

we can create index using CURL script with above command.

Note :we can create a shell “.sh” file we can execute using Linux commands it will also work fine.

Inserting sample data in to index:

POST/test_index/test{

"id": 1,

"name": "Ktree.com"

}

POST is the operation to insert data in to ES index using sense.

Once we insert the data into elastic search index the following is the response from server.

{

"_index": "test_index",

"_type": "test",

"_id": "AVHsQ3SfZ9plJTxmPDRx",

"_version": 1,

"created": true

}

Here highlighted “_id” field is a unique key in elastic search containing each record inserting in to the index which would help to find a record quickly in bigger indices.

Our suggestion is to choose a good key or number for _id field. While building any of your indices it prevents inserting any duplicates to elastic search.

Advantage of choosing good number (unique number) to _id field:

- we can perform search operations using that key.

- we delete record in index by that id exactly.

- Help us to update quickly.

Search Data in index:

We have lot of criteria in search of ES index based on our requirements. The following is the way to perform term search operations on a particular string fields.

(In Es we have flexibility to make a field full text search or not.This analyzed and not_analyzed will be impact on white space in our data , by default es analyzed property will be split data in to words to perform full text search)

The following are the properties we are using make this scenarios.

Analyzed: By default a search field is analyzed in elastic search index which will allow full text search.

Not_analyzed: will be helpful to prevent full-text search on a particular field.

GET/_search{

"filter": {

"term": {

"name": "ktree.com"

}

}

}

or a exact term which you want to search.

Delete index:

- we can delete mapping and we can.

Update Data :

- It is a best practice to update a particular record or record(s) using a unique field.

POST/test_index/test/AVHsUfrFZ9plJTxmPDRy{

"id": 12,

"name": "Ktree com com"

}

- Id is must to update a record in index (example: AVHsUfrFZ9plJTxmPDRy).

- In my small index I have done using a random id to the particular record so no problem exists here.

- If an index contains millions of records/docs, it will be helpful in that case.

- If a record got updated in index , it will change version of that record current updated record and new version record readily available for search & old one will be hidden.

Note:

Elastic search keys are case sensitive once created. If we are performing any data indexing with some wrong field rather than existing one (since es in NOSQL), it will insert the new field automatically to the schema.We can expect that it is indexing on desired field but when we search the data, we can understand what happened exactly.

Small example:

POST/test_index/test{

"id": 12,

"names": "Ktree com com"

}

instead of ” name ” field by mistake i have inserted using “names” field now the mapping changed like this.

{

"test_index": {

"mappings": {

"test": {

"properties": {

"id": {

"type": "integer"

},

"name": {

"type": "string"

},

"names": {

"type": "string"

}

}

}

}

}

}

}Anotherfield"names"isinsertedintoschemaanddataisassingedtonamesfieldnotnamefield.{

"_index": "test_index",

"_type": "test",

"_id": "AVHsb-LIZ9plJTxmPDR1",

"_score": 1,

"_source": {

"id": 13,

"names": "Ktree com com"

}

}Theaboverecordhasindexedwith"names"fieldifwedotermsearchonthisquery.GET/test_index/test/_search{

"filter": {

"term": {

"name": "ktree"

}

}

}

- The above record 13 will not come in term search field. so here comes the issue as this field is not indexed with name field too.

- The existing records will have data with names & some of then have data with names field which leads to data inconsistency and inconstant search operations.

- Again there is a need to delete entire index data -> recreate -> re-index the data.

- As it is a very costly operation, we need to be very careful while indexing data to existing mappings.

Best Practices:

Choosing data types for fields plays important role in memory consumption of fields:

- Since elastic search is noSQL it will allow explicit mappings created using API or from river.

- If the data is pushing to specific index & if index is not available, elastic search by default will create a new index with default types.

- If ES is created like that some of the field data types may not be suitable to our index requirements .In this case we need to choose correct data types in order to save our elastic search memory on fields.

- Let say my id requires a integer datatype but when elastic search has created mapping , It uses datatype “long”. In this case ”long” will occupy more memory compared to integer.

- It is always best practice to create index with suitable data types to save memory. To achieve this concept we can use custom templates for automatic index creation scenarios with specified schema by user.

Another Reason why we need User defined index?

- By Default elastic search will assign analyzed to string data types in elastic search schema. Some of the fields may require or disabling the full text searching.

- In this case custom index creation will be helpful, we can define which fields need to be involve in in full text search or not to use for full text search.