Demystifying DynamoDB: An In-Depth Guide to Navigating Through AWS’s Fast and Flexible NoSQL Database Service

Explore the many capabilities of Amazon DynamoDB with this comprehensive guide.


Introduction

DynamoDB

Amazon DynamoDB is a fully managed NoSQL database service provided by Amazon Web Services (AWS). It is designed to provide fast and predictable performance with seamless scalability for applications that require single-digit millisecond latency access to data. DynamoDB is known for its high availability, durability, and ease of use. Here are some key features and characteristics of AWS DynamoDB:

- Managed Service: DynamoDB is a fully managed service. AWS handles hardware provisioning, setup, configuration, replication, software patching, and cluster scaling, so developers can focus on building applications instead of managing database infrastructure.

- NoSQL Database: DynamoDB is a NoSQL database, and it offers flexible data models, including key-value and document data models. You can use it to store structured, semi-structured, or unstructured data.

- Scalability: DynamoDB is designed for seamless scalability. You can easily scale up or down to handle changes in traffic and data volume. It provides both manual and auto-scaling options.

- Performance: DynamoDB is optimized for low-latency performance. It stores data on solid-state drives (SSDs). For read-heavy workloads that need even faster responses, you can add DynamoDB Accelerator (DAX), an optional in-memory cache.

- Availability and Durability: DynamoDB is built for high availability and data durability. It replicates data across multiple Availability Zones within an AWS Region to provide fault tolerance. If you enable point-in-time recovery (PITR), you can also restore a table to any second within the preceding 35 days.

- Security: DynamoDB provides fine-grained access control through AWS Identity and Access Management (IAM). You can control who can access your tables and what operations they can perform.

- Global Tables: DynamoDB offers Global Tables, which allow you to replicate data across multiple AWS regions for low-latency access from different geographic locations.

- Event-Driven Programming: You can use DynamoDB Streams to capture changes to your data and trigger AWS Lambda functions or other AWS services in response to those changes, enabling event-driven programming.

- On-Demand and Provisioned Capacity: DynamoDB offers both on-demand and provisioned capacity modes. On-demand mode automatically scales your table’s capacity based on actual usage, while provisioned mode allows you to specify and pay for the desired read and write capacity units.

- Backup and Restore: DynamoDB provides on-demand backups and continuous backups through point-in-time recovery (PITR). You can easily create and manage backups of your data.

- Integration: DynamoDB integrates with various AWS services, including AWS Lambda, Amazon S3, AWS Glue, and AWS Step Functions, allowing you to build complex and serverless applications.

DynamoDB is commonly used for a wide range of applications, including web and mobile applications, gaming, IoT, content management systems, and more. Its managed nature, scalability, and low-latency performance make it a popular choice for developers looking to build highly available and responsive applications on AWS.

NoSQL/SQL Comparison

NoSQL and SQL are two different types of database management systems, each with its own strengths and weaknesses. Here’s a comparison of the two in various aspects:

- Data Model:

– SQL: SQL databases are relational databases that use structured query language (SQL) for defining and manipulating the data. They use tables to organize data into rows and columns with a predefined schema.

– NoSQL: NoSQL databases are non-relational and can handle structured, semi-structured, or unstructured data. They include various data models such as document-based, key-value, column-family, and graph databases.

- Schema:

– SQL: SQL databases require a predefined schema, which means you must define the structure of your data before inserting it. Changes to the schema can be complex and may require downtime.

– NoSQL: NoSQL databases are typically schema-less or schema-flexible, allowing you to insert data without a predefined schema. This makes it easier to adapt to changing data requirements.

- Query Language:

– SQL: SQL databases use a standardized query language (SQL) for data manipulation and querying. SQL provides powerful capabilities for complex queries and joins.

– NoSQL: NoSQL databases often have their own query languages or APIs, which can vary between different databases. Query capabilities may be more limited compared to SQL.

- Scalability:

– SQL: SQL databases are typically scaled vertically (by adding more CPU, RAM, or storage to a single server) and can be challenging to scale horizontally across multiple servers.

– NoSQL: NoSQL databases are designed for horizontal scalability, allowing you to add more servers to distribute the load as your data and traffic increase.

- ACID vs. BASE:

– SQL: SQL databases follow the ACID (Atomicity, Consistency, Isolation, Durability) properties, ensuring strong data consistency and reliability.

– NoSQL: NoSQL databases often follow the BASE (Basically Available, Soft state, Eventually consistent) model, which prioritizes availability and scalability over strict consistency.

- Use Cases:

– SQL: SQL databases are well-suited for applications with structured data, complex queries, and strict data integrity requirements, such as financial systems, inventory management, and traditional relational applications.

– NoSQL: NoSQL databases excel in scenarios where flexibility, scalability, and fast data ingestion are crucial, such as content management systems, real-time analytics, IoT applications, and social media platforms.

- Examples:

– SQL: MySQL, PostgreSQL, Oracle, Microsoft SQL Server

– NoSQL: MongoDB (document-based), Redis (key-value), Cassandra (column-family), Neo4j (graph)

It’s important to note that the choice between SQL and NoSQL databases depends on the specific requirements and constraints of your application. Many modern applications use a combination of both types of databases, often referred to as a polyglot persistence strategy, to leverage the strengths of each for different parts of the application.

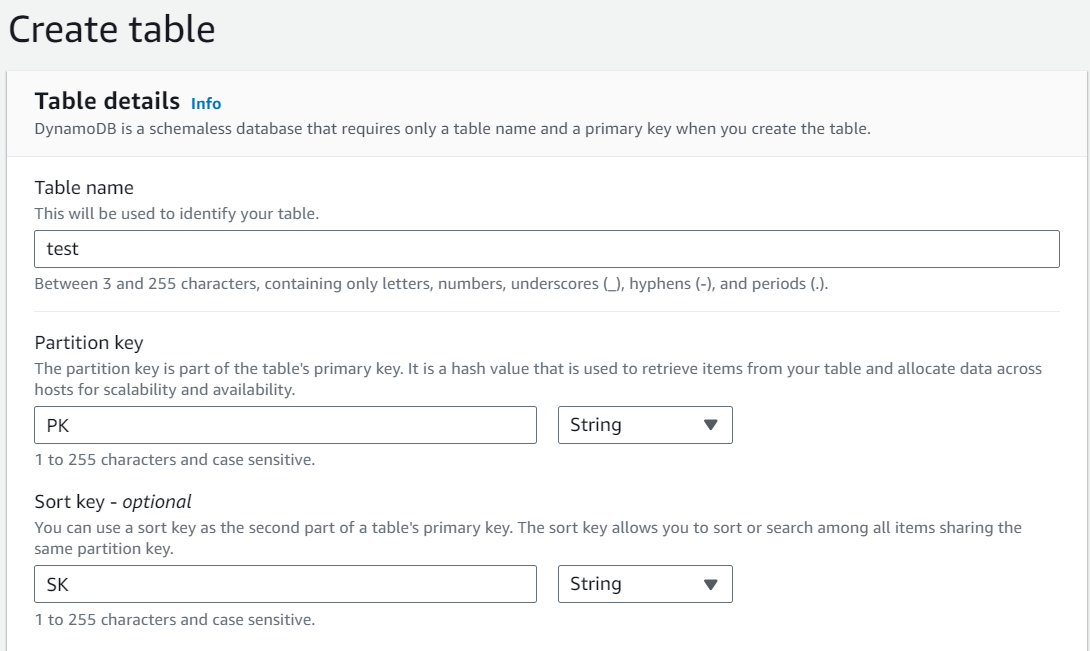

Tables & Naming Conventions

When working with Amazon DynamoDB, creating and naming tables is a fundamental aspect of database design. Properly naming your DynamoDB tables is important for clarity, consistency, and maintainability in your AWS environment. Here are some guidelines and best practices for DynamoDB table naming conventions:

- Use Descriptive Names: Choose table names that describe the data stored in the table. A well-chosen name makes it easier for you and your team to understand the purpose of the table. Avoid overly generic names like “Table1” or “DataStore.”

- Use camelCase or snake_case: Pick a casing style, such as camelCase or snake_case, to match your team's conventions. For example, “myTableName” or “my_table_name.”

- Prefix Tables (Optional): Some teams like to prefix their table names to indicate a project or application. This can be useful when you have multiple tables in the same AWS account for different purposes. For example, “myapp_users” or “projectname_orders.”

- Stick to Allowed Characters: DynamoDB table names must be 3 to 255 characters long. They may contain only letters, numbers, underscores (_), hyphens (-), and periods (.). Spaces and other special characters are rejected.

- Case Sensitivity: DynamoDB table names are case-sensitive, so “MyTable” and “mytable” are two different tables.

- Keep Names Short and Meaningful: While it’s important to be descriptive, overly long table names can become cumbersome. Aim for names that are both meaningful and concise.

- Consider Global Tables: DynamoDB Global Tables replicate data across multiple AWS regions, and every replica uses the same table name. If you plan to use them, make sure that name is not already taken by another table in any region where you intend to add a replica.

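- Avoid Reserved Words: DynamoDB maintains a list of reserved words, such as “name,” “status,” and “table.” They matter mainly for attribute names. If you reference a reserved word in a key condition, filter, projection, or update expression, you must substitute a placeholder (for example, #n) through ExpressionAttributeNames. Avoiding reserved words keeps your expressions simpler.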

- Versioning (Optional): If your application undergoes significant changes and you need to maintain multiple versions of a table, consider including a version number in the table name (e.g., “myapp_v1_users” and “myapp_v2_users”).

- Consistency Across Environments: Maintain consistency in naming conventions across different environments (e.g., development, staging, production) to avoid confusion when managing tables in different contexts.

Here are a few examples of well-named DynamoDB tables following these conventions:

– “ecommerce_products”

– “user_profiles”

– “blog_comments”

– “inventory_data”

– “analytics_metrics”

Remember that good naming conventions contribute to the readability and maintainability of your DynamoDB tables, making it easier for you and your team to work with your database as your application evolves and grows.
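You can catch invalid names before calling `create-table` with a small shell helper. It checks a proposed name against DynamoDB's character rules: 3 to 255 characters, each a letter, digit, underscore (_), hyphen (-), or period (.). The second function encodes a lowercase snake_case convention like the examples above. That check is a hypothetical team rule, not something DynamoDB enforces.

```bash
# Sketch: check proposed DynamoDB table names before calling create-table.

# DynamoDB's rule: 3-255 characters, each one a letter, digit, "_", "-", or ".".
is_valid_table_name() {
  case $1 in
    *[!A-Za-z0-9_.-]*) return 1 ;;   # reject spaces and any other character
  esac
  [ "${#1}" -ge 3 ] && [ "${#1}" -le 255 ]
}

# Hypothetical team convention (not a DynamoDB rule): lowercase words joined
# by single underscores, starting with a letter, e.g. "blog_comments".
follows_snake_case() {
  case $1 in
    "" | [!a-z]* | *[!a-z0-9_]* | *_ | *__*) return 1 ;;
  esac
  return 0
}
```

For example, `is_valid_table_name "user profiles"` fails because of the space, while `is_valid_table_name "user-profiles.v2"` passes even though it would not satisfy the snake_case convention.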

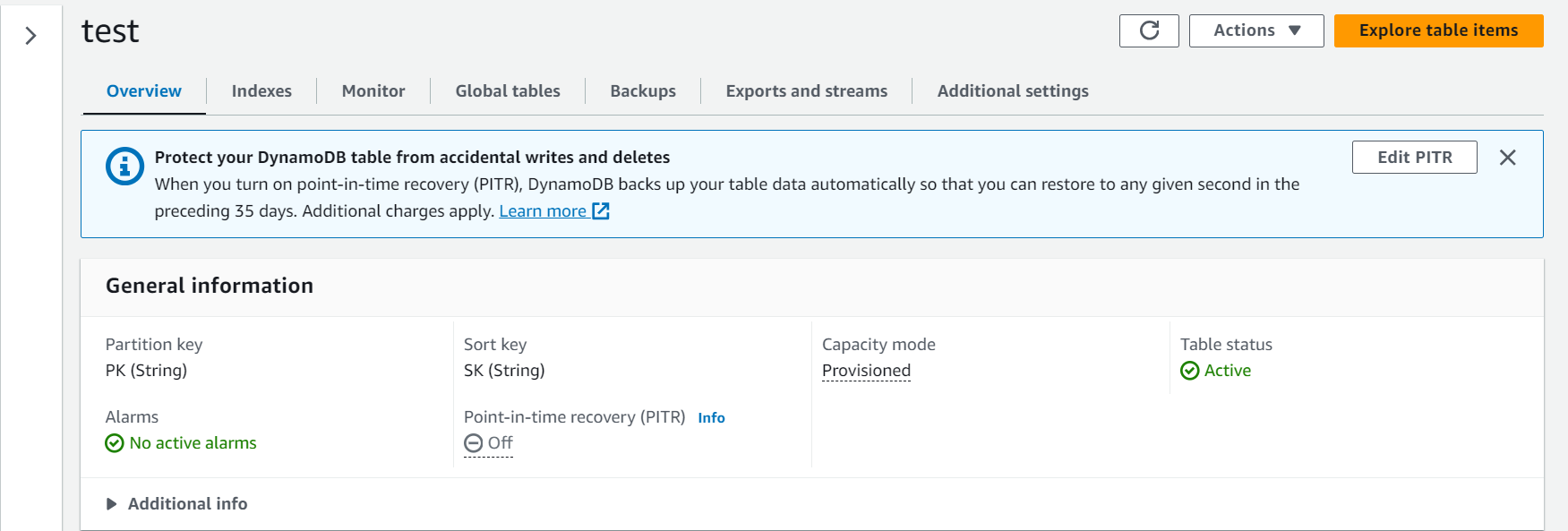

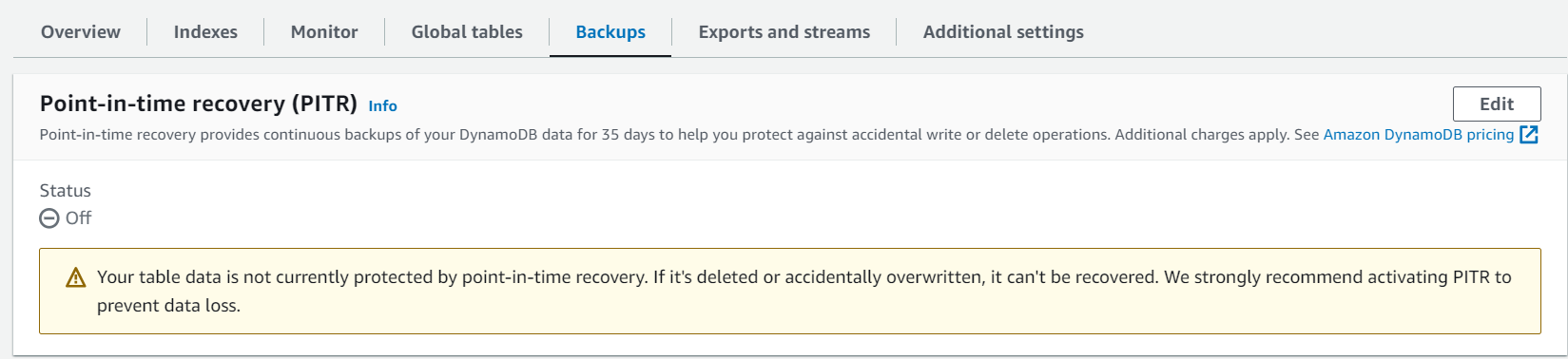

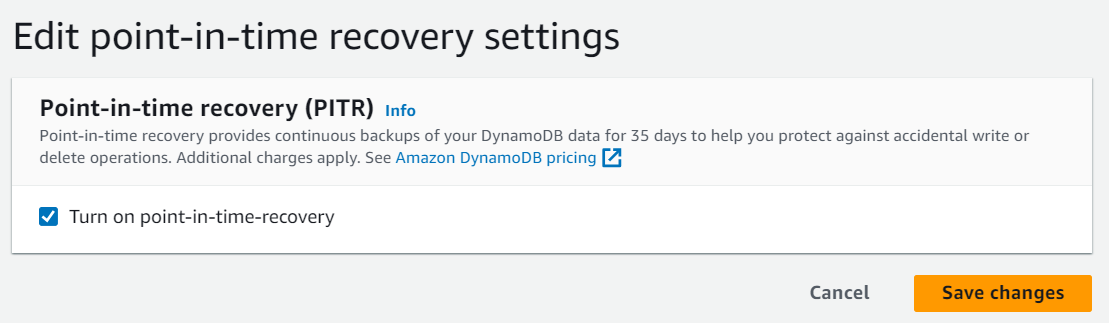

PITR

Amazon DynamoDB enables you to back up your table data continuously by using point-in-time recovery (PITR). When you enable PITR, DynamoDB backs up your table data automatically with per-second granularity so that you can restore to any given second in the preceding 35 days.

PITR helps protect you against accidental writes and deletes. For example, PITR has you covered if a test script accidentally writes to a production DynamoDB table, or if someone mistakenly issues a “DeleteItem” call.

Using PITR, you can back up tables with hundreds of terabytes of data, with no impact on the performance or availability of your production applications. You also can recover PITR-enabled DynamoDB tables that were deleted in the preceding 35 days, and restore tables to their state just before they were deleted.
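Enabling PITR and restoring from it each take a single AWS CLI call. The sketch below wraps those calls in shell functions. The table names and timestamp are placeholders. A restore always writes to a new table rather than overwriting the source.

```bash
# Sketch: manage point-in-time recovery with the AWS CLI.

# enable_pitr TABLE: turn on continuous backups for a table.
enable_pitr() {
  aws dynamodb update-continuous-backups \
    --table-name "$1" \
    --point-in-time-recovery-specification PointInTimeRecoveryEnabled=true
}

# show_restore_window TABLE: print the earliest and latest restorable times.
show_restore_window() {
  aws dynamodb describe-continuous-backups \
    --table-name "$1" \
    --query 'ContinuousBackupsDescription.PointInTimeRecoveryDescription.[EarliestRestorableDateTime,LatestRestorableDateTime]'
}

# restore_to_point SOURCE TARGET TIMESTAMP: restore SOURCE, as it was at
# TIMESTAMP (e.g. 2024-05-01T12:30:00Z), into the new table TARGET.
restore_to_point() {
  aws dynamodb restore-table-to-point-in-time \
    --source-table-name "$1" \
    --target-table-name "$2" \
    --restore-date-time "$3"
}
```

A typical recovery after an accidental delete might be `restore_to_point orders orders_restored 2024-05-01T12:30:00Z`. You would then verify the restored copy before pointing the application at it.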

Datatypes

Amazon DynamoDB, as a NoSQL database, supports a variety of data types to accommodate different types of data and use cases. Understanding these data types is important for designing your database schema and working with your data effectively. Here are the primary data types supported by DynamoDB:

- Scalar Data Types:

– String: Represents a UTF-8 encoded sequence of characters. Strings are commonly used for attributes like names, descriptions, and labels.

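– Number: Represents a positive, negative, or zero number with up to 38 digits of precision. In the low-level API and the AWS CLI, numbers are sent as strings (e.g., {"N": "123.45"}) so that precision is preserved across languages. DynamoDB still treats them as numbers for comparisons and arithmetic.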

– Binary: Represents binary data, such as images, audio files, or serialized data. When you send binary values through the low-level API or the AWS CLI, you encode them as base64 strings.

– Boolean: Represents either true or false values.

– Null: Represents an attribute that has no value. It’s different from an empty string or an attribute that doesn’t exist.

- Set Data Types:

– DynamoDB also supports sets for string, number, and binary data types. Sets are unordered collections of unique values. Sets come in three varieties:

– String Set: A set of string values.

– Number Set: A set of number values.

– Binary Set: A set of binary values.

- Document Data Types:

– Map: Represents a nested JSON-like object within an attribute. Maps are used to store structured data with multiple attributes. Each attribute within a map can have its own data type.

– List: Represents an ordered list of values. Lists can contain elements of different data types, and they can be nested to create complex structures.

- Data Type Descriptors (the type codes used by the low-level API and the AWS CLI):

– String (S): A scalar string.

– Number (N): A scalar number.

– Binary (B): A scalar binary value.

– Boolean (BOOL): A scalar Boolean.

– Null (NULL): A scalar null value.

– Map (M): A document data type that represents a map.

– List (L): A document data type that represents a list.

– String Set (SS): A set of strings.

– Number Set (NS): A set of numbers.

– Binary Set (BS): A set of binary values.

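When you create a DynamoDB table, you define data types only for the key attributes: the table's primary key and any index keys. Each of these must be a String, Number, or Binary. All other attributes are schemaless. Each item can carry its own set of attributes, and their types come from the values you write. When you work with DynamoDB through the low-level API or the AWS CLI, you tag each value with its type descriptor. Higher-level SDK clients can do this conversion for you.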

It’s important to choose the right data type for each attribute to ensure efficient storage and querying. For example, use numbers for numerical data, strings for text, and sets when you need to store multiple unique values in a single attribute. Document data types like maps and lists are useful when you have complex and hierarchical data structures.

DynamoDB’s flexibility in supporting various data types allows you to model your data in a way that best fits your application’s requirements, making it a versatile choice for a wide range of use cases.
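In the AWS CLI, every attribute value is written as a one-key JSON object whose key is the type descriptor. The sketch below writes one item that uses each descriptor listed above. The table `user_profiles`, its string partition key `user_id`, and all attribute names are hypothetical. The sketch assumes AWS CLI v2, which expects binary (`B`, `BS`) values as base64 text.

```bash
# Sketch: one item exercising every DynamoDB type descriptor.
# put_sample_profile [TABLE]  (defaults to the hypothetical "user_profiles")
put_sample_profile() {
  local table="${1:-user_profiles}"
  # Numbers ("N", "NS") are passed as strings; binary values are base64.
  aws dynamodb put-item \
    --table-name "$table" \
    --item '{
      "user_id":    {"S": "u-1001"},
      "age":        {"N": "34"},
      "avatar":     {"B": "iVBORw0KGgo="},
      "verified":   {"BOOL": true},
      "nickname":   {"NULL": true},
      "address":    {"M": {"city": {"S": "Seattle"}, "zip": {"S": "98101"}}},
      "recent_ids": {"L": [{"S": "a1"}, {"N": "42"}]},
      "tags":       {"SS": ["admin", "beta"]},
      "scores":     {"NS": ["10", "20.5"]},
      "blobs":      {"BS": ["AAEC", "AwQF"]}
    }'
}
```

Only `user_id` has to be declared when the table is created. Every other attribute here gets its type from the descriptor in this request. The list `recent_ids` mixes a string and a number, which lists allow and sets do not.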

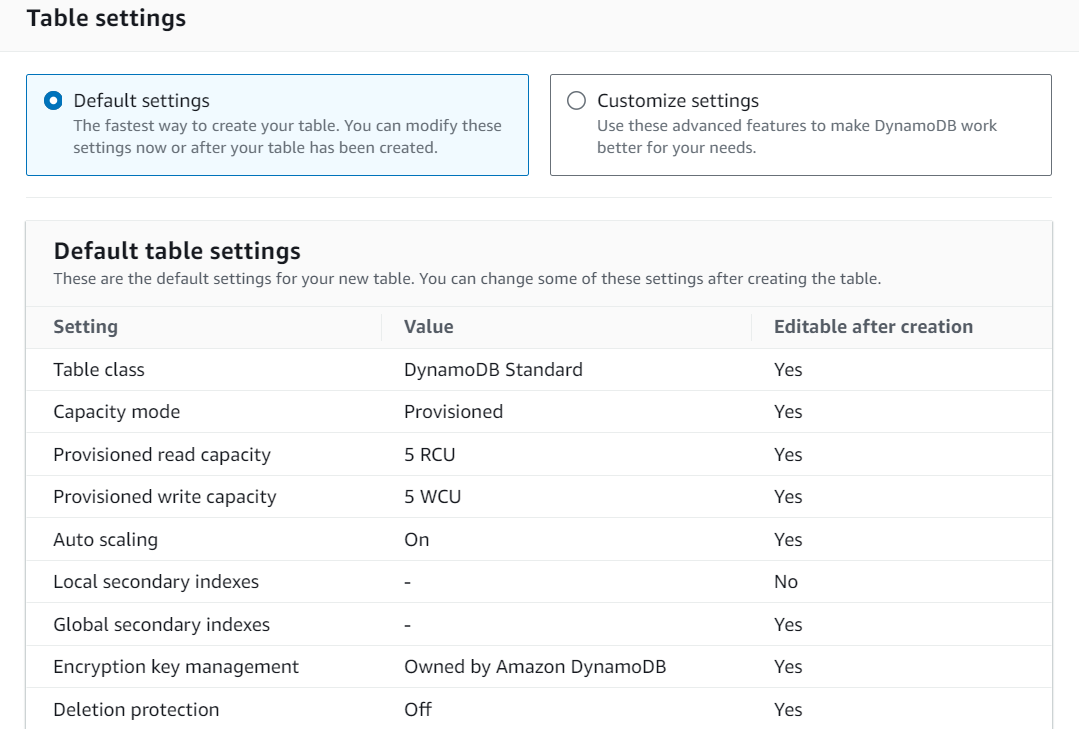

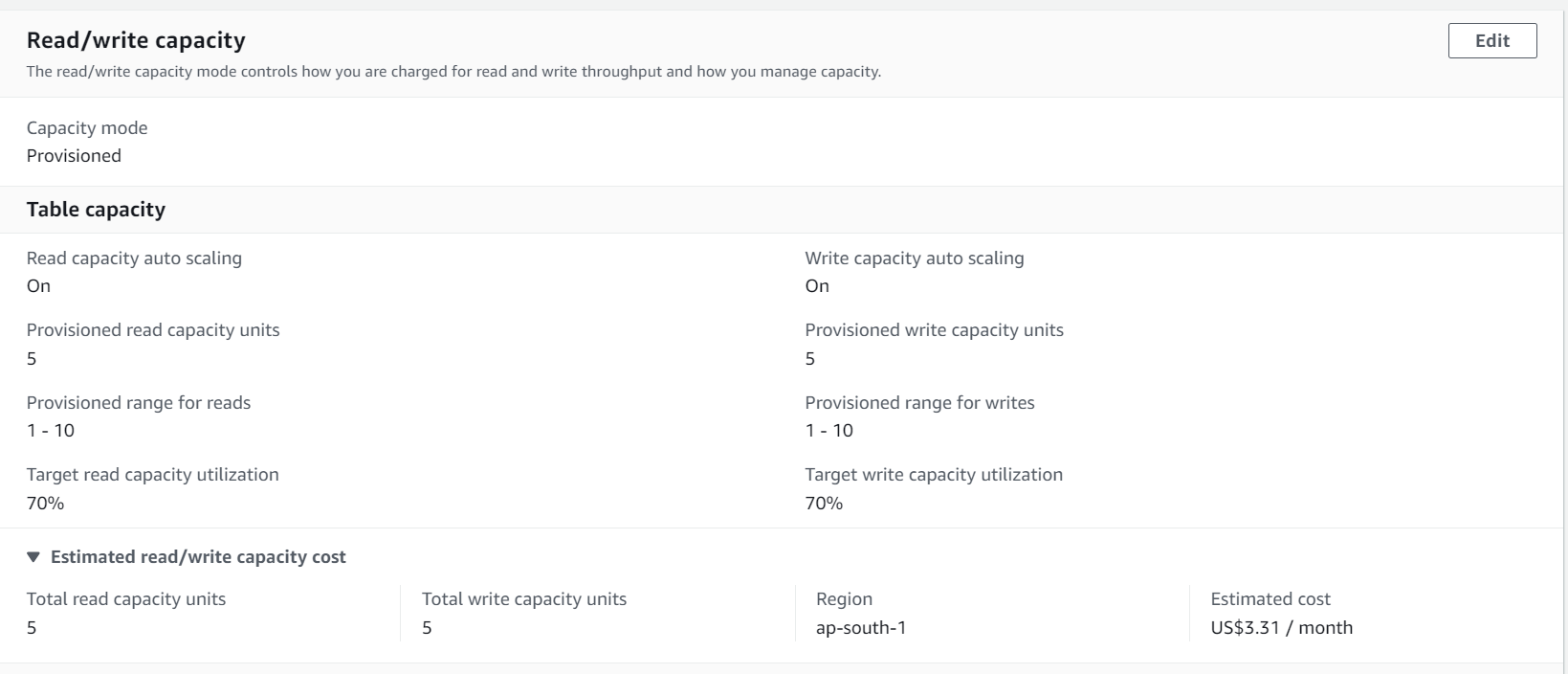

Capacity Units

Amazon DynamoDB, a managed NoSQL database service by Amazon Web Services (AWS), uses a capacity unit model to allocate and manage the resources required for reading and writing data. This model helps you provision the necessary resources to meet the performance needs of your applications. There are two types of capacity units in DynamoDB: Read Capacity Units (RCUs) and Write Capacity Units (WCUs).

- Read Capacity Units (RCUs):

– RCUs represent the capacity required for reading data from DynamoDB tables.

– One RCU provides one strongly consistent read per second, or two eventually consistent reads per second, for an item up to 4 KB.

– For items larger than 4 KB, DynamoDB rounds the size up to the next 4 KB and charges per 4 KB block. For example, a strongly consistent read of an 8 KB item consumes 2 RCUs, and an eventually consistent read of the same item consumes 1 RCU.

– DynamoDB offers two types of read consistency: eventual consistency and strong consistency. Strong consistency ensures that you always read the latest data after a write. However, strong consistency consumes twice as many RCUs as eventual consistency.

– Reads are eventually consistent by default. To request a strongly consistent read, set the ConsistentRead parameter to true (the --consistent-read flag in the AWS CLI).

- Write Capacity Units (WCUs):

– WCUs represent the capacity required for writing data to DynamoDB tables.

– One WCU is equivalent to writing one item per second, with a maximum item size of 1 KB.

– As with RCUs, larger items consume more capacity. DynamoDB rounds the item size up to the next 1 KB, so writing a 3.5 KB item consumes 4 WCUs.

– DynamoDB supports batch writes (BatchWriteItem), which let you write multiple items in a single request. A batch consumes the same WCUs as writing each item individually, so a batch of 1 KB items consumes one WCU per item.

– Conditional writes let you write an item only if specific conditions are met. They consume the same number of WCUs as regular writes and still consume write capacity when the condition check fails.

When you create a table in provisioned capacity mode, you specify its capacity in RCUs and WCUs. Base the provisioned capacity on your expected read and write workloads. Monitor your application’s usage and adjust the provisioned capacity as needed to keep performance optimal.
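The sizing rules above reduce to a little integer arithmetic. The sketch below estimates the RCUs and WCUs needed for a steady request rate. It assumes every item is the same size (given in bytes) and rounds the eventually consistent total up to a whole unit. It covers only the standard read and write rules described here, so treat it as a planning aid, not a billing calculator.

```bash
# Sketch: estimate provisioned capacity for a steady workload.
# Reads are metered in 4 KB blocks and writes in 1 KB blocks; item sizes are
# rounded up to the next block before multiplying by the request rate.

# required_rcus ITEM_BYTES READS_PER_SECOND strong|eventual
required_rcus() {
  local item_bytes=$1 reads=$2 consistency=$3
  local blocks=$(( (item_bytes + 4095) / 4096 ))   # 4 KB read blocks
  local strong=$(( blocks * reads ))              # 1 RCU per block per strong read
  case $consistency in
    strong)   echo "$strong" ;;
    eventual) echo $(( (strong + 1) / 2 )) ;;     # half the cost, rounded up
    *) echo "consistency must be 'strong' or 'eventual'" >&2; return 1 ;;
  esac
}

# required_wcus ITEM_BYTES WRITES_PER_SECOND
required_wcus() {
  local item_bytes=$1 writes=$2
  local blocks=$(( (item_bytes + 1023) / 1024 ))  # 1 KB write blocks
  echo $(( blocks * writes ))
}
```

For instance, 10 eventually consistent reads per second of 6 KB items give `required_rcus 6144 10 eventual`, which prints 10. Five writes per second of 2,500-byte items give `required_wcus 2500 5`, which prints 15.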

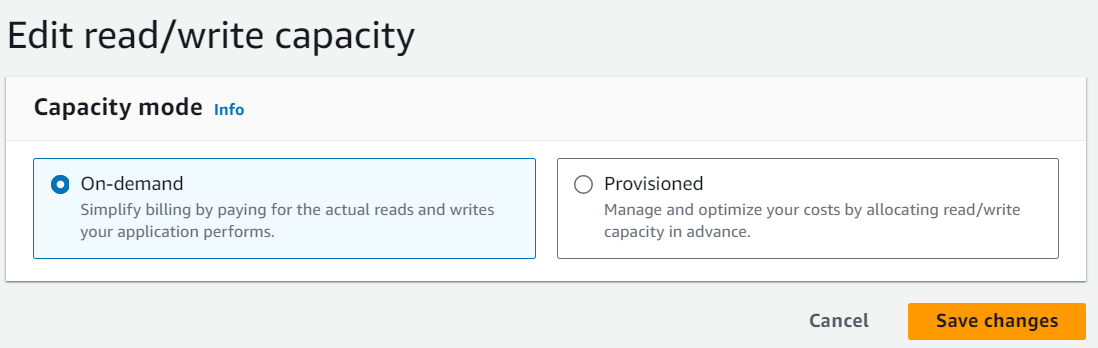

Besides setting RCUs and WCUs manually, DynamoDB offers two ways to match capacity to demand:

- On-Demand Capacity Mode:

– In this mode, you don’t need to specify RCUs and WCUs manually. You pay for the read and write capacity you actually consume. This mode is suitable for applications with varying workloads or if you prefer not to manage capacity provisioning.

- Auto-Scaling:

– DynamoDB supports auto-scaling, which automatically adjusts the provisioned capacity up or down based on your actual usage. It helps maintain consistent performance without manual intervention.

Properly managing capacity units is crucial for achieving the desired performance and cost-effectiveness in DynamoDB. Whether you provision capacity manually, use on-demand mode, or employ auto-scaling, understanding your application’s workload and monitoring usage will help you optimize the resource allocation for your DynamoDB tables.

DynamoDB On-Demand Capacity

Amazon DynamoDB On-Demand Capacity Mode is a billing option that eliminates the need for manual provisioning of Read Capacity Units (RCUs) and Write Capacity Units (WCUs) in your DynamoDB tables. Instead, you pay only for the read and write requests that you actually perform, without specifying or preallocating capacity. This can be a convenient and cost-effective option for applications with variable workloads or for those that want to simplify capacity management.

Here are some key details about DynamoDB On-Demand Capacity Mode:

- Pay-Per-Request Pricing: With On-Demand Capacity Mode, you are billed based on the actual read and write requests you make to your DynamoDB tables. There is no need to estimate or provision capacity units in advance.

- Automatic Scaling: DynamoDB adjusts to changes in workload without manual intervention. A table in on-demand mode instantly accommodates up to double its previous traffic peak. Traffic that grows beyond double the previous peak within 30 minutes may be throttled briefly while DynamoDB adds capacity.

- Cost-Efficiency: On-Demand Capacity Mode can be cost-effective for workloads with unpredictable or bursty traffic patterns. You don’t pay for unused capacity during low-traffic periods, which can result in cost savings.

- No Capacity Planning Overhead: You pay request charges, plus storage and any optional features, which are billed in either mode. Beyond those, there are no upfront or ongoing charges for provisioning and managing capacity units.

Here’s an example to illustrate how DynamoDB On-Demand Capacity Mode works:

Suppose you have an e-commerce application with a product catalog stored in a DynamoDB table. During normal business hours, the application experiences a high volume of traffic as users browse and search for products. However, late at night and during weekends, traffic drops significantly.

With On-Demand Capacity Mode:

– During peak hours, when the application receives a high number of read and write requests, DynamoDB automatically scales up the capacity to handle the increased load. You are billed for the actual requests made during this time.

– During off-peak hours, when traffic is minimal, you are billed only for the comparatively few requests that still arrive.

This flexibility allows you to ensure your application’s performance during busy periods while minimizing costs during idle times.

To switch your DynamoDB table to On-Demand Capacity Mode, you can simply update the table’s capacity settings using the AWS Management Console, AWS CLI, or an SDK. For example, using the AWS CLI, you can modify a table’s capacity mode like this:

```bash
aws dynamodb update-table --table-name YourTableName --billing-mode PAY_PER_REQUEST
```

It’s important to note that while On-Demand Capacity Mode simplifies capacity management, it might not be the most cost-effective choice for all workloads. For predictable, high-traffic workloads, you may find it more economical to use provisioned capacity with RCUs and WCUs. You should carefully analyze your application’s traffic patterns and choose the capacity mode that best fits your requirements and budget.

DynamoDB Partitions

Amazon DynamoDB uses a distributed storage architecture with partitions to ensure scalability, high availability, and low latency. Understanding how DynamoDB partitions data is crucial for designing efficient and performant database schemas. Here’s an in-depth look at DynamoDB partitions:

- What is a Partition in DynamoDB?

– A partition in DynamoDB is a physical storage unit that holds a portion of a table’s data.

– Each partition is associated with a specific amount of storage and provisioned throughput capacity (measured in Read Capacity Units and Write Capacity Units).

– DynamoDB uses partitions to distribute and manage the data across multiple servers and storage devices for horizontal scalability.

- How Data is Partitioned:

– DynamoDB partitions data based on the partition key, which is specified when creating a table.

– The partition key is a specific attribute of each item in the table.

– DynamoDB hashes the partition key value and uses the hash value to determine the partition where an item will be stored.

– The goal is to evenly distribute data across partitions to ensure uniform performance and avoid hot partitions (partitions with disproportionately high activity).

- Importance of Choosing the Right Partition Key:

– Selecting the right partition key is a critical design decision in DynamoDB.

– A well-chosen partition key ensures that data is distributed evenly across partitions, minimizing the risk of uneven workload distribution.

– Poorly chosen partition keys can result in hot partitions, where a single partition receives a disproportionate amount of read or write activity, potentially leading to performance bottlenecks.

- Partition Size:

– Each partition can store a maximum of 10 GB of data.

– If a partition exceeds this limit, DynamoDB automatically splits it into smaller partitions to maintain efficient performance.

- Provisioned Throughput per Partition:

– The provisioned throughput you specify for your table (in RCUs and WCUs) is spread across its partitions. For example, if you provision 1,000 RCUs and 1,000 WCUs for a table with three partitions, each partition nominally receives about 333 RCUs and 333 WCUs.

– Adaptive capacity lets a busy partition use unused throughput from the rest of the table. However, a single partition still cannot exceed 3,000 RCUs or 1,000 WCUs.

- Query Performance and Partitions:

– Query performance in DynamoDB is closely tied to the partition key.

– A Query always targets a single partition key value on the table or on a secondary index, so DynamoDB reads only the items stored under that key. This is what makes queries efficient.

– Scan operations, by contrast, read every partition of the table or index, so they consume more capacity and take longer to execute.

- Monitoring and Managing Partitions:

– DynamoDB provides metrics and tools for monitoring the performance and health of partitions.

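– Amazon CloudWatch reports consumed RCUs and WCUs per table and per global secondary index. CloudWatch Contributor Insights for DynamoDB can show which partition keys are accessed or throttled most often.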

– To mitigate hot partitions, you may need to redesign your partition key or use write sharding. Write sharding adds a random or calculated suffix to the partition key so that writes spread across more partitions.

In summary, DynamoDB partitions data based on the partition key, and understanding how this works is crucial for achieving optimal performance and scalability. Properly chosen partition keys and careful consideration of access patterns can help you design efficient and well-distributed DynamoDB tables. Monitoring and tuning your table’s performance, especially when dealing with large datasets, is an ongoing process to ensure your application’s responsiveness.
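Sharding, mentioned above as a fix for hot partitions, spreads one busy logical key across several partition key values. The sketch below uses a calculated suffix. It hashes an item ID with the POSIX `cksum` utility, so the same ID always maps to the same shard, and a reader can locate an item without checking every shard. The `base#shard` key format and the shard count are illustrative assumptions.

```bash
# Sketch: calculated-suffix write sharding for a hot partition key.
# Key format "<base>#<shard>" and the shard count are illustrative choices.

# sharded_partition_key BASE_KEY ITEM_ID SHARD_COUNT
sharded_partition_key() {
  local base=$1 item_id=$2 shards=$3
  local crc
  crc=$(printf '%s' "$item_id" | cksum | cut -d ' ' -f 1)   # deterministic hash
  echo "${base}#$(( crc % shards ))"
}

# all_shard_keys BASE_KEY SHARD_COUNT: one key per line, for fan-out reads.
all_shard_keys() {
  local base=$1 shards=$2 i=0
  while [ "$i" -lt "$shards" ]; do
    echo "${base}#${i}"
    i=$(( i + 1 ))
  done
}
```

Writes for a busy day such as `2024-06-01` land on keys like `2024-06-01#0` through `2024-06-01#9`. To read the whole day, you would query each key printed by `all_shard_keys 2024-06-01 10` and merge the results. More shards spread writes further but make that fan-out read more expensive.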

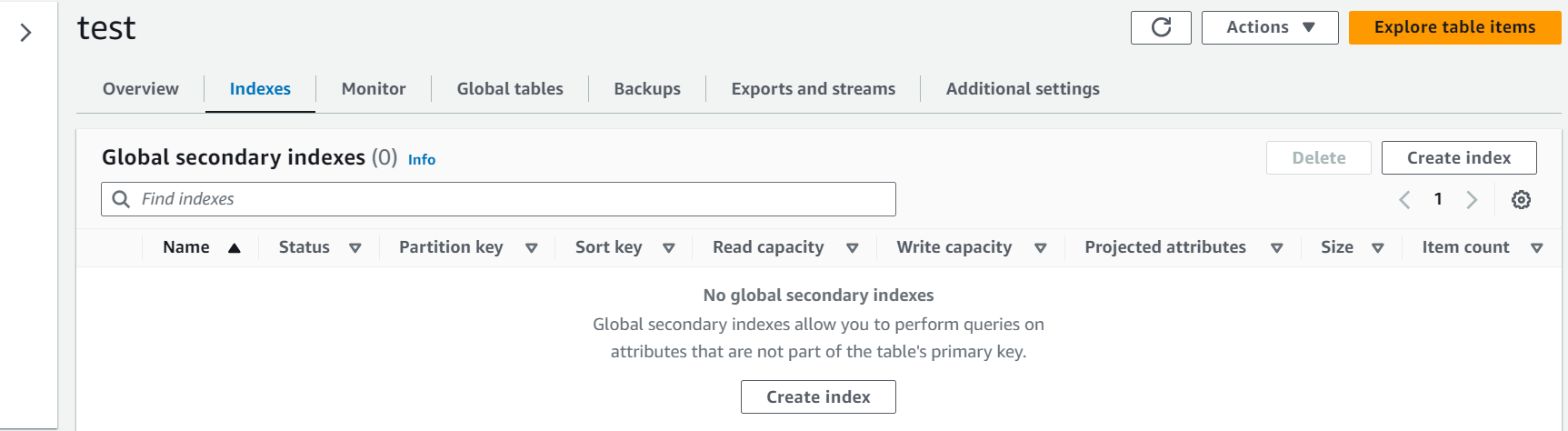

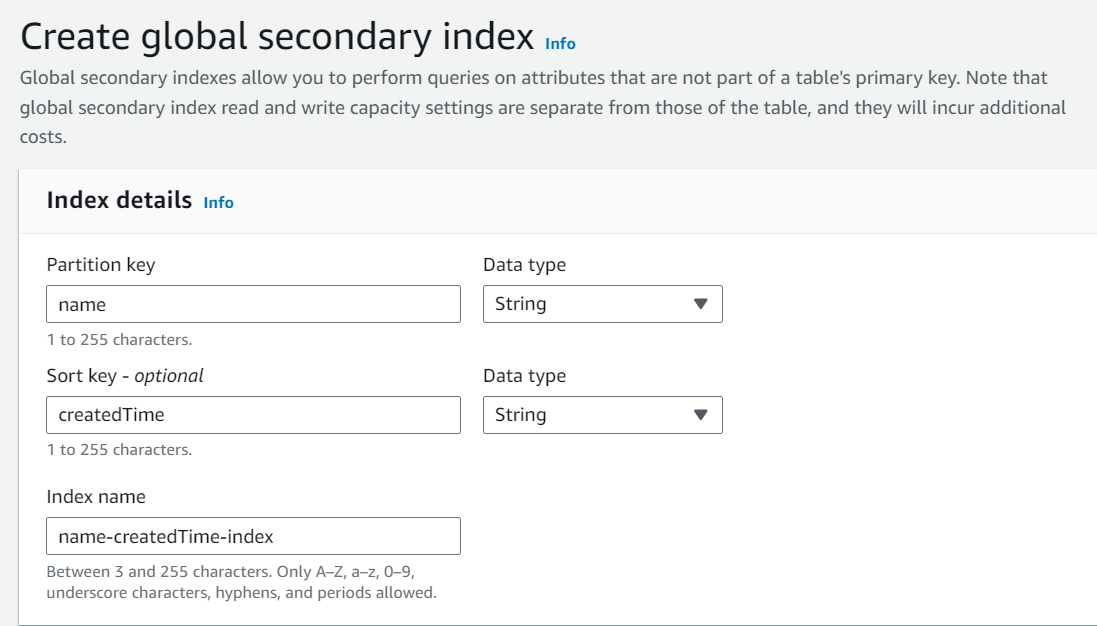

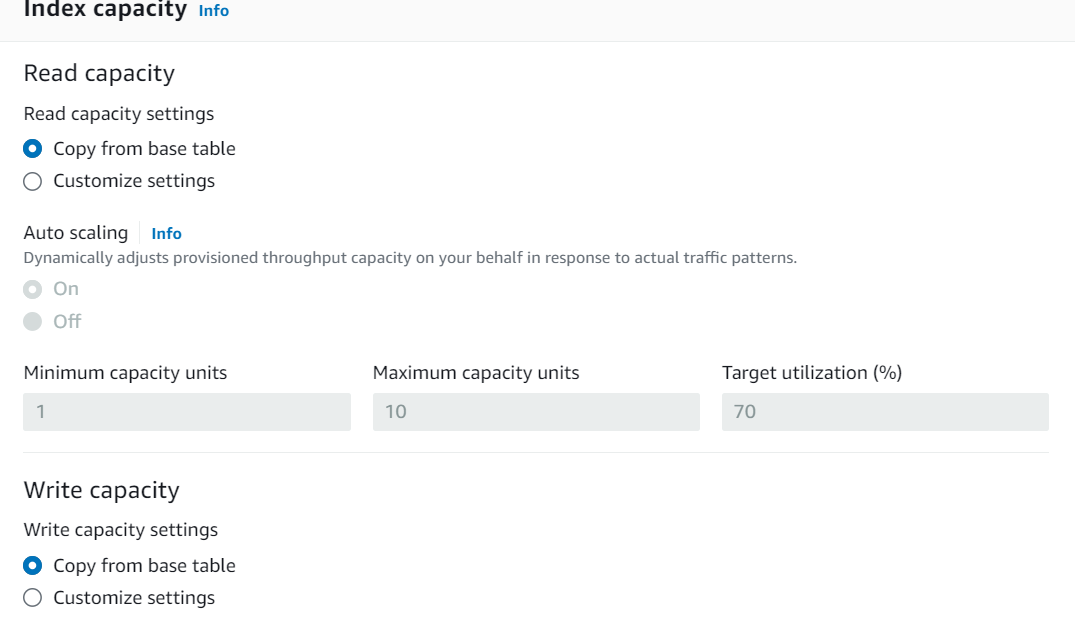

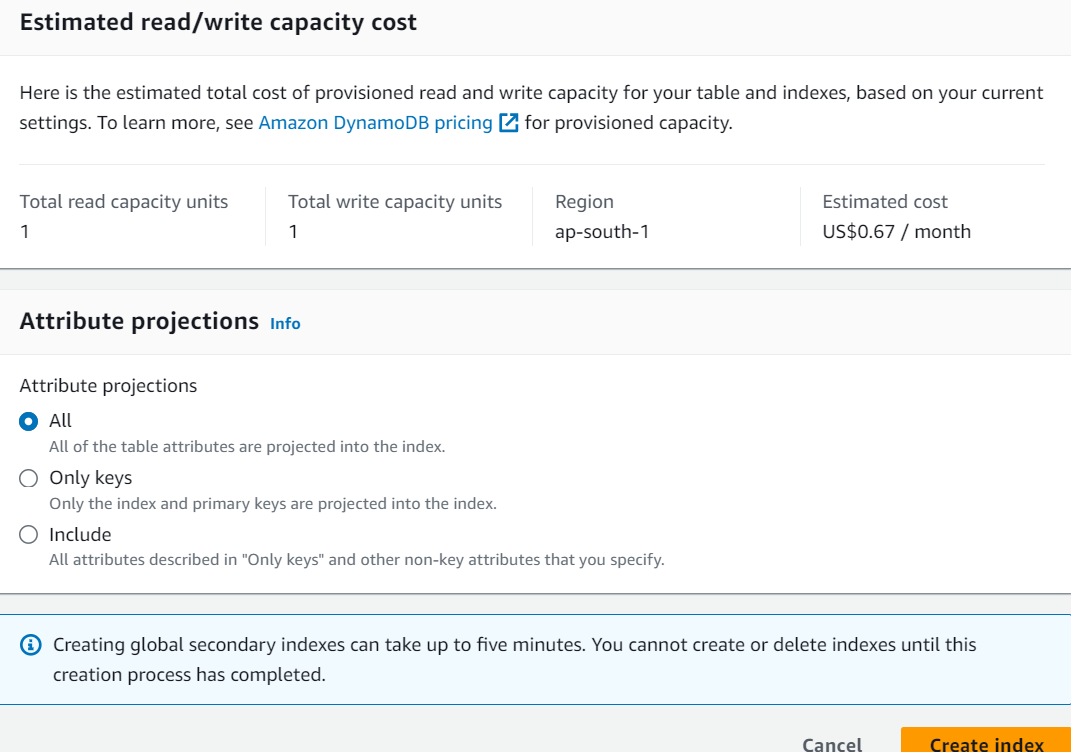

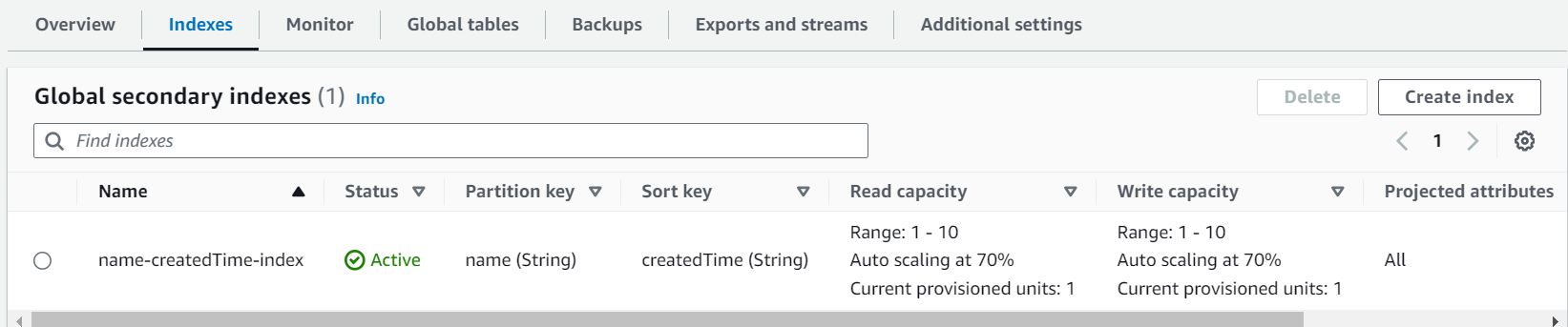

Global Secondary Index

Amazon DynamoDB Global Secondary Indexes (GSI) are a powerful feature that enables efficient querying of data in DynamoDB tables using alternate keys other than the primary key. GSIs provide flexibility in data retrieval, allowing you to perform queries and scans on attributes that are not part of the table’s primary key. Here’s a detailed explanation of DynamoDB Global Secondary Indexes with examples:

- Purpose of Global Secondary Indexes:

– DynamoDB tables are typically designed with a primary key consisting of a partition key and an optional sort key.

– GSIs allow you to define alternative keys, separate from the primary key, to create different access patterns for your data.

– This enables efficient querying on attributes that are not part of the primary key and supports use cases where you need to retrieve data based on various criteria.

- Anatomy of a Global Secondary Index:

– A GSI consists of its own key schema plus a projection: the set of table attributes copied into the index (keys only, a chosen list of attributes, or all attributes).

– The index key schema includes a partition key and an optional sort key.

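– The partition key of the GSI doesn’t need to be the same as the table’s partition key, but it must be a top-level attribute of type String, Number, or Binary. Items that lack the index key attributes are simply left out of the index.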

– GSIs are eventually consistent. Changes to the table propagate to the index asynchronously, and reads from a GSI cannot be strongly consistent.

- Example of Using a Global Secondary Index:

– Suppose you have a DynamoDB table called “Employee” with a primary key composed of “EmployeeID” (partition key) and “LastName” (sort key).

– If you often need to query employees by their “Department” attribute, you can create a GSI with the partition key set to “Department” and an optional sort key based on your query requirements.

- Querying with Global Secondary Indexes:

– Once a GSI is created, you can perform queries on it using the `Query` operation.

– For example, with the GSI mentioned above, you can query all employees in the “HR” department by specifying the department value as the partition key in the query.

- Benefits of Global Secondary Indexes:

– Improved query performance: A Query on a GSI reads only the items that match the index key instead of scanning the whole table.

– Reduced table scans: Without GSIs, you might need to perform full table scans to filter data based on non-primary key attributes.

– Flexibility: GSIs allow you to adapt your database schema to evolving application requirements without modifying the primary key.

- Costs and Considerations:

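– GSIs add storage costs, and each GSI has its own read and write capacity, separate from the table's. Every table write that changes indexed attributes also consumes write capacity on the index. If an index is under-provisioned, writes to the base table can be throttled.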

– Careful consideration of access patterns and query requirements is essential when designing GSIs to avoid over-provisioning or underutilization.
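Using the “Employee” example above, the sketch below adds a GSI on “Department” to an existing table and then queries it for a department such as “HR.” It assumes the table uses on-demand capacity. A provisioned table would also need a `ProvisionedThroughput` entry in the `Create` block. The index name `DepartmentIndex` and the `ALL` projection are hypothetical choices.

```bash
# Sketch: add a GSI keyed on Department to the "Employee" table, then query it.
# Assumes an on-demand table; "DepartmentIndex" is a hypothetical index name.

add_department_index() {
  aws dynamodb update-table \
    --table-name Employee \
    --attribute-definitions AttributeName=Department,AttributeType=S \
    --global-secondary-index-updates '[{
      "Create": {
        "IndexName": "DepartmentIndex",
        "KeySchema": [{"AttributeName": "Department", "KeyType": "HASH"}],
        "Projection": {"ProjectionType": "ALL"}
      }
    }]'
}

# query_department DEPARTMENT: list employees in one department.
# GSI reads are eventually consistent; the value must not contain double quotes.
query_department() {
  aws dynamodb query \
    --table-name Employee \
    --index-name DepartmentIndex \
    --key-condition-expression "#dept = :d" \
    --expression-attribute-names '{"#dept": "Department"}' \
    --expression-attribute-values "{\":d\": {\"S\": \"$1\"}}"
}
```

After the index finishes building, `query_department HR` returns the HR employees. The `#dept` placeholder is not strictly required for this attribute name, but it keeps the expression safe if you later switch to an attribute whose name is a reserved word.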

Local Secondary Index

Amazon DynamoDB Local Secondary Indexes (LSI) let you efficiently query data in DynamoDB tables using an alternate sort key. A Global Secondary Index (GSI) can use an entirely different partition key. An LSI, by contrast, keeps the table's partition key and changes only the sort key, so it orders the items under each partition key value differently. Here’s a detailed explanation of DynamoDB Local Secondary Indexes:

- Purpose of Local Secondary Indexes:

– Local Secondary Indexes enable efficient querying of data based on a sort key attribute that is different from the primary key’s sort key.

– They are useful when you need to retrieve data in a way that is organized differently than the table’s primary key.

- Anatomy of a Local Secondary Index:

– An LSI is created when you create the DynamoDB table.

– It uses the same partition key as the table’s primary key but allows you to specify a different sort key.

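– LSIs have no capacity settings of their own. Reads and writes on an LSI consume the base table's read and write capacity. Writes that update an LSI therefore consume additional write capacity from the table, and the index adds storage.

- Benefits of Local Secondary Indexes: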

– Improved query performance: LSIs allow efficient queries on a specific range of sort key values.

– Reduced table scans: Without LSIs, you might need to scan or filter items to sort or select them by an attribute other than the table's sort key.

– Simpler capacity management: LSIs draw on the table's capacity, so there is no separate index throughput to provision. Index writes and storage still add cost.

- Limitations and Considerations:

– An LSI must use the table's partition key; only a GSI can be built on a different partition key.

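– You can create up to five LSIs per table. In a table with LSIs, all items that share a partition key value (an item collection) are limited to 10 GB in total.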

– Changes to LSIs (creation, modification, or deletion) can only be done during table creation or by deleting and recreating the table.

– Careful planning is required when designing LSIs to ensure they meet your query requirements without overcomplicating your table schema.

DynamoDB Local Secondary Indexes provide flexibility for tailored queries within the context of a single table, making them a valuable tool for optimizing data retrieval in your applications.
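Because an LSI can only be defined when the table is created, it appears in the `create-table` call itself. The sketch below creates a hypothetical `customer_orders` table keyed on `CustomerId` and `OrderId`. Its LSI re-sorts each customer's orders by `OrderDate`. The sketch then runs a strongly consistent range query on that index, which LSIs support and GSIs do not. Table, attribute, and index names are assumptions, and on-demand billing is used so no capacity settings are needed.

```bash
# Sketch: define an LSI at table creation, then query it by date range.
# All names are hypothetical; dates are ISO 8601 strings so they sort correctly.

create_orders_table() {
  aws dynamodb create-table \
    --table-name customer_orders \
    --attribute-definitions \
        AttributeName=CustomerId,AttributeType=S \
        AttributeName=OrderId,AttributeType=S \
        AttributeName=OrderDate,AttributeType=S \
    --key-schema AttributeName=CustomerId,KeyType=HASH AttributeName=OrderId,KeyType=RANGE \
    --local-secondary-indexes '[{
      "IndexName": "OrderDateIndex",
      "KeySchema": [
        {"AttributeName": "CustomerId", "KeyType": "HASH"},
        {"AttributeName": "OrderDate", "KeyType": "RANGE"}
      ],
      "Projection": {"ProjectionType": "ALL"}
    }]' \
    --billing-mode PAY_PER_REQUEST
}

# orders_between CUSTOMER_ID FROM_DATE TO_DATE (values must not contain double quotes)
orders_between() {
  aws dynamodb query \
    --table-name customer_orders \
    --index-name OrderDateIndex \
    --consistent-read \
    --key-condition-expression "CustomerId = :c AND OrderDate BETWEEN :from AND :to" \
    --expression-attribute-values "{\":c\": {\"S\": \"$1\"}, \":from\": {\"S\": \"$2\"}, \":to\": {\"S\": \"$3\"}}"
}
```

A call such as `orders_between c-42 2024-01-01 2024-03-31` returns one customer's first-quarter orders in date order, even though the table itself is sorted by `OrderId`.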

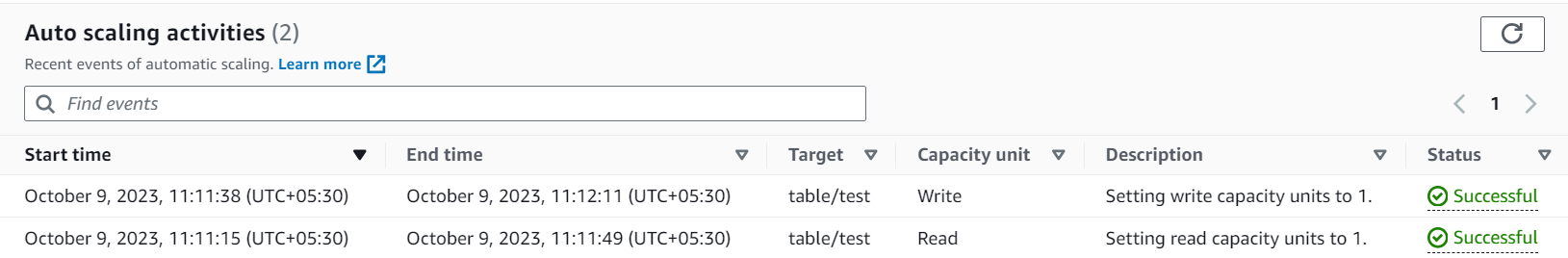

Autoscaling

Amazon DynamoDB Auto Scaling is a feature that allows you to automatically adjust the provisioned read and write capacity of your DynamoDB tables in response to changes in your application’s traffic patterns. Auto Scaling helps ensure that your tables can handle varying workloads without manual intervention while optimizing cost efficiency. Here’s a detailed explanation of DynamoDB Auto Scaling:

- Purpose of Auto Scaling:

– DynamoDB Auto Scaling helps you maintain consistent database performance by automatically adjusting read and write capacity to meet the demands of your application’s workloads.

– It eliminates the need for manual provisioning and capacity management, reducing the risk of over-provisioning or under-provisioning.

- How Auto Scaling Works:

– Auto Scaling continuously monitors the consumption of read and write capacity units (RCUs and WCUs) in your DynamoDB table.

– When consumed capacity stays above or below the target utilization for a sustained period, CloudWatch alarms trigger Application Auto Scaling to adjust the provisioned capacity. Adjustments always stay within the minimum and maximum you set.

– Each scaling action raises or lowers provisioned capacity by the amount needed to bring utilization back toward the target, rather than by a fixed increment.

- Configuration Parameters:

– When configuring Auto Scaling, you need to specify the following parameters:

– Target Utilization: The percentage of provisioned capacity you want consumed; Auto Scaling adjusts capacity to keep utilization near this value. You can set it between 20% and 90%, and the console default is 70%.

– Minimum and Maximum Capacity: The minimum and maximum RCUs and WCUs that Auto Scaling can provision for your table.

– Scaling Policy: DynamoDB uses a target tracking scaling policy built around the target utilization. Through the Application Auto Scaling API, you can also set cooldown periods that control how quickly successive scale-in and scale-out actions can occur.

- Scaling Actions:

– Scaling actions can be triggered in response to changes in the workload:

– Scale In: Reducing provisioned capacity when consumption is consistently below the target utilization.

– Scale Out: Increasing provisioned capacity when consumption is consistently above the target utilization.

– Auto Scaling can also perform both scale in and scale out actions for read and write capacity independently.

- Integration with Amazon CloudWatch:

– Auto Scaling leverages Amazon CloudWatch to monitor your table’s capacity consumption and trigger scaling actions.

– You can view Auto Scaling-related metrics and configure CloudWatch alarms to send notifications when Auto Scaling actions occur.

- Example Scenario:

– Suppose you have a DynamoDB table used by an e-commerce application.

– During holidays or special events, your application experiences a surge in traffic, resulting in higher read and write demands.

– With Auto Scaling, you can set up scaling policies to automatically increase the provisioned capacity when traffic spikes and decrease it during low-traffic periods.

- Cost Efficiency:

– You still pay for the capacity you provision. Auto Scaling keeps provisioned capacity close to what you actually consume, so less of it sits idle.

– During low-traffic periods, Auto Scaling can reduce provisioned capacity, resulting in cost savings.

- Enabling and Disabling Auto Scaling:

– You can enable Auto Scaling when creating a DynamoDB table or modify an existing table to enable Auto Scaling.

– Disabling Auto Scaling stops automatic capacity adjustments but retains the provisioned capacity settings.

DynamoDB Auto Scaling is a valuable feature for applications with variable workloads, as it ensures that your tables can handle changes in demand without manual intervention. By configuring Auto Scaling properly, you can maintain a responsive and cost-effective database infrastructure.
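Under the hood, DynamoDB auto scaling is driven by Application Auto Scaling. The sketch below registers a table's read capacity as a scalable target and attaches a target tracking policy. The table name, capacity bounds, and policy name are placeholders. Write capacity would use the `dynamodb:table:WriteCapacityUnits` dimension and the `DynamoDBWriteCapacityUtilization` metric instead. For simplicity, the function accepts only whole-number targets between 20 and 90 percent.

```bash
# Sketch: enable target-tracking auto scaling for a table's read capacity.
# enable_read_autoscaling TABLE MIN_RCU MAX_RCU TARGET_PERCENT
enable_read_autoscaling() {
  local table=$1 min=$2 max=$3 target=$4
  # DynamoDB accepts target utilization between 20 and 90 percent.
  if [ "$target" -lt 20 ] || [ "$target" -gt 90 ]; then
    echo "target utilization must be between 20 and 90" >&2
    return 1
  fi

  # 1. Register the read-capacity dimension and its bounds.
  aws application-autoscaling register-scalable-target \
    --service-namespace dynamodb \
    --resource-id "table/${table}" \
    --scalable-dimension dynamodb:table:ReadCapacityUnits \
    --min-capacity "$min" \
    --max-capacity "$max" || return 1

  # 2. Keep consumed/provisioned read capacity near the target percentage.
  aws application-autoscaling put-scaling-policy \
    --service-namespace dynamodb \
    --resource-id "table/${table}" \
    --scalable-dimension dynamodb:table:ReadCapacityUnits \
    --policy-name "${table}-read-target-tracking" \
    --policy-type TargetTrackingScaling \
    --target-tracking-scaling-policy-configuration \
      "{\"TargetValue\": ${target}, \"PredefinedMetricSpecification\": {\"PredefinedMetricType\": \"DynamoDBReadCapacityUtilization\"}}"
}
```

For the e-commerce scenario above, `enable_read_autoscaling orders 5 100 70` would let read capacity float between 5 and 100 RCUs while aiming for 70% utilization.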

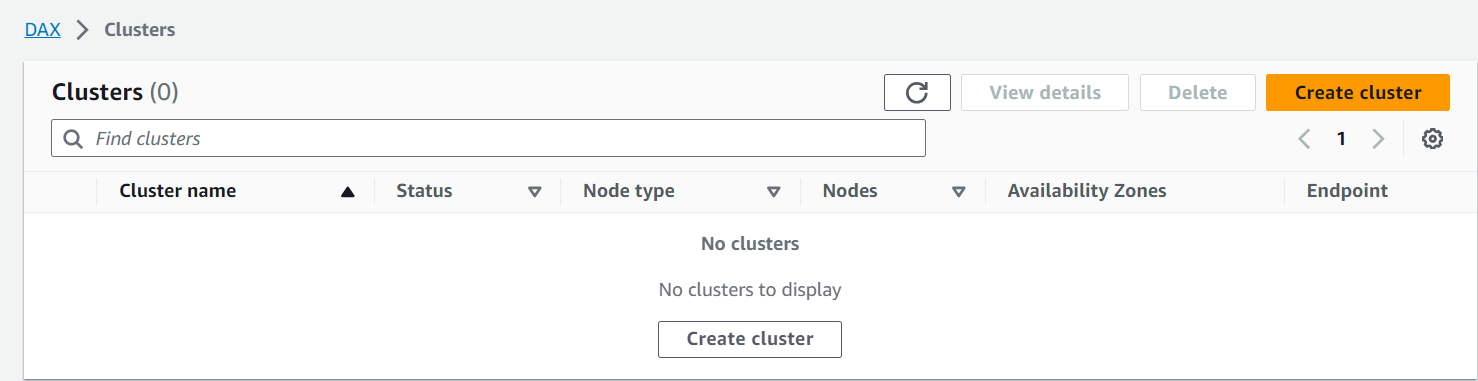

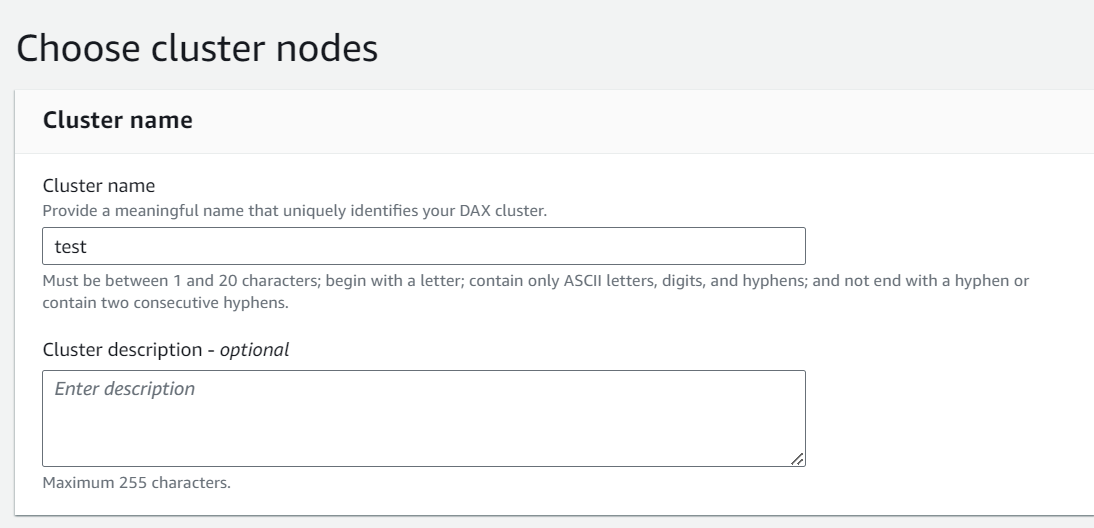

Dynamodb Accelerator

DynamoDB Accelerator (DAX) is a fully managed, highly available, and highly scalable in-memory cache for Amazon DynamoDB. DAX is designed to accelerate read-intensive workloads on DynamoDB by providing microsecond response times for cached reads. Because DAX is API-compatible with DynamoDB, you can adopt it with minimal code changes: you replace the DynamoDB client with a DAX client and keep your existing calls. Here’s a detailed explanation of DynamoDB Accelerator (DAX):
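A DAX cluster is provisioned separately from your tables. The sketch below uses the AWS CLI to create a three-node cluster with encryption at rest and then check its status. The cluster name, node type, and IAM role ARN are placeholders. The role must grant the cluster access to the tables it will cache. Applications then connect through a DAX client pointed at the cluster endpoint instead of the standard DynamoDB client.

```bash
# Sketch: create a DAX cluster and check whether it is ready.

# create_dax_cluster CLUSTER_NAME IAM_ROLE_ARN
create_dax_cluster() {
  # Three nodes (a primary plus two replicas); node type is a placeholder choice.
  aws dax create-cluster \
    --cluster-name "$1" \
    --node-type dax.t3.small \
    --replication-factor 3 \
    --iam-role-arn "$2" \
    --sse-specification Enabled=true
}

# dax_cluster_status CLUSTER_NAME: print the cluster's Status field.
dax_cluster_status() {
  aws dax describe-clusters \
    --cluster-names "$1" \
    --query 'Clusters[0].Status' \
    --output text
}
```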

- Purpose of DAX:

– DAX is designed to address the latency challenges that can be associated with read-heavy DynamoDB workloads.

– It serves as a cache layer between your application and DynamoDB, storing frequently accessed data in memory for rapid retrieval.

- Key Features of DAX:

– In-Memory Cache: DAX stores data in memory, providing low-latency access to frequently accessed data.

– Automatic Cache Management: DAX populates and evicts cache entries for you. Cached entries expire after a configurable time-to-live, and the least recently used entries are evicted when the cache is full.

– Compatibility: DAX is API-compatible with DynamoDB, so switching usually means replacing the DynamoDB client with a DAX client while keeping the same operations.

– High Availability: A DAX cluster can place its nodes in multiple Availability Zones. If the primary node fails, DAX automatically promotes one of the read replicas.

– Security: DAX supports encryption at rest and in transit, ensuring data security.

- How DAX Works:

– You first create a DAX cluster; it is not created automatically for a table. A single cluster can serve any DynamoDB tables in the same Region that its IAM role is permitted to access.

– Your application sends its requests to the cluster’s endpoint through a DAX client. For each read, DAX checks whether the requested data is present in its cache.

– If the data is in the cache, DAX serves it directly to the application, providing sub-millisecond response times.

– If the data is not in the cache, DAX retrieves it from DynamoDB, updates the cache, and then serves it to the application.

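– Writes sent through DAX are written to DynamoDB first and then cached (write-through). Reads served from the cache are eventually consistent, so they may briefly miss changes made directly in DynamoDB. Strongly consistent reads are passed through to DynamoDB and are not cached.

- Use Cases for DAX: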

– DAX is particularly beneficial for applications that require low-latency access to DynamoDB data, such as real-time analytics, gaming leaderboards, and high-traffic web applications.

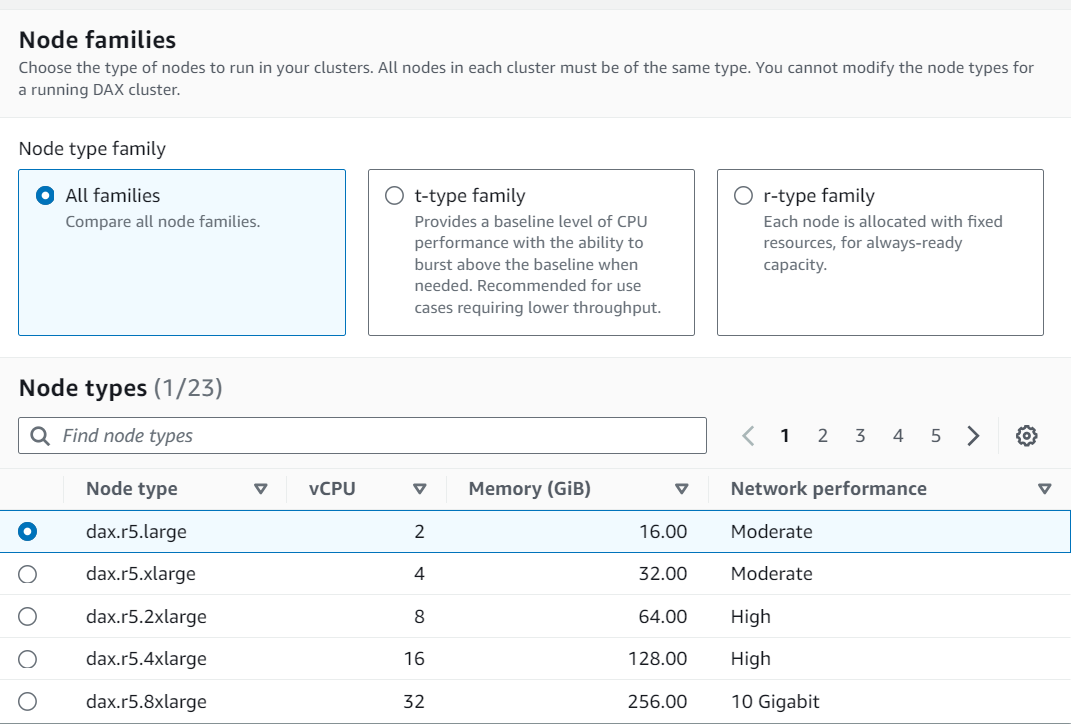

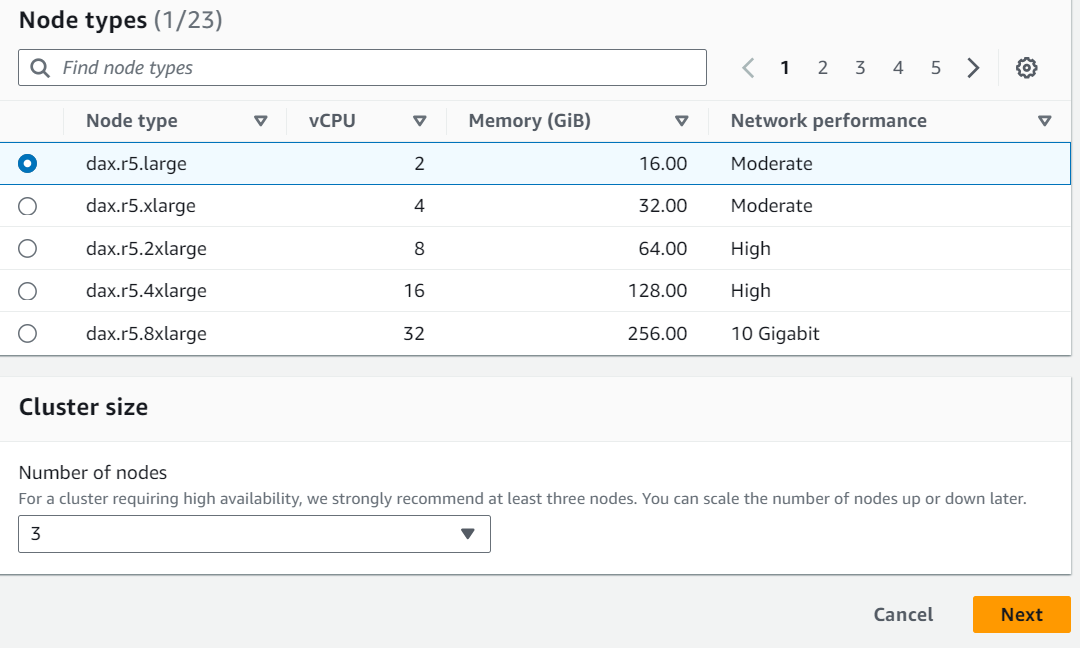

– It can accelerate read-heavy workloads without overprovisioning DynamoDB read capacity units (RCUs).

- Configuration and Setup:

– To use DAX, you create a DAX cluster in your VPC and give it an IAM role that permits access to the DynamoDB tables your application uses.

– You size the cluster by choosing the node type and the number of nodes based on your application’s needs.

– You must update your application to use a DAX client configured with the cluster endpoint when making DynamoDB requests.

- Cost Considerations:

– DAX is priced separately from DynamoDB. You pay per node-hour, so cost depends on the node type and the number of nodes in the cluster.

– Careful consideration of the cache size and query patterns is essential to optimize costs and performance.

- Example Scenario:

– Consider an e-commerce application that needs to display product details to users.

– With DAX, frequently accessed product data, such as product descriptions, image URLs, and prices, can be cached for sub-millisecond retrieval, resulting in a faster user experience.

DynamoDB Accelerator (DAX) is a valuable tool for optimizing the performance of read-heavy DynamoDB workloads. By integrating DAX into your application architecture, you can achieve low-latency access to your DynamoDB data without building and operating your own caching layer.
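To make the caching behavior concrete, here is a toy, in-process model of a cache with these properties:

- Read-through on a miss and write-through on a put.
- Per-entry expiry.
- Least-recently-used eviction.

It is not the DAX client and makes no network calls. A plain dictionary stands in for the DynamoDB table, and the class name and parameters are hypothetical.

```python
import time
from collections import OrderedDict
from typing import Callable, Dict, Optional, Tuple

Item = Dict[str, object]


class ReadThroughCache:
    """Toy model of a DAX-style item cache (hypothetical, not the real client).

    - Read-through: a miss loads the item from the backing table and caches it.
    - Write-through: writes go to the table first, then update the cache.
    - Entries expire after ttl_seconds; when full, the least recently used
      entry is evicted. "Not found" results are cached too (negative caching).
    """

    def __init__(
        self,
        backing_get: Callable[[str], Optional[Item]],
        backing_put: Callable[[str, Item], None],
        capacity: int = 1000,
        ttl_seconds: float = 300.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._get = backing_get
        self._put = backing_put
        self._capacity = capacity
        self._ttl = ttl_seconds
        self._clock = clock
        # key -> (expires_at, item); ordering tracks recency, oldest first.
        self._entries: "OrderedDict[str, Tuple[float, Optional[Item]]]" = OrderedDict()
        self.hits = 0
        self.misses = 0

    def get_item(self, key: str) -> Optional[Item]:
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and entry[0] > now:
            self._entries.move_to_end(key)  # mark as most recently used
            self.hits += 1
            return entry[1]
        # Miss or expired entry: read from the table, then cache the result.
        self.misses += 1
        item = self._get(key)
        self._store(key, item, now)
        return item

    def put_item(self, key: str, item: Item) -> None:
        self._put(key, item)  # persist to the table first
        self._store(key, item, self._clock())

    def _store(self, key: str, item: Optional[Item], now: float) -> None:
        self._entries[key] = (now + self._ttl, item)
        self._entries.move_to_end(key)
        while len(self._entries) > self._capacity:
            self._entries.popitem(last=False)  # evict least recently used
```

An entry stays cached until it expires or is evicted. A change written directly to the table, bypassing the cache, is therefore not visible through the cache until then. This is the eventual-consistency trade-off to keep in mind when reading through DAX.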

DynamoDB Streams and Triggers with AWS Lambda

Amazon DynamoDB Streams and AWS Lambda are powerful AWS services that, when used together, provide a way to react to changes in your DynamoDB tables in real-time and trigger custom actions. In this detailed explanation, I’ll cover DynamoDB Streams, AWS Lambda, and how they work together with examples and use cases.

DynamoDB Streams:

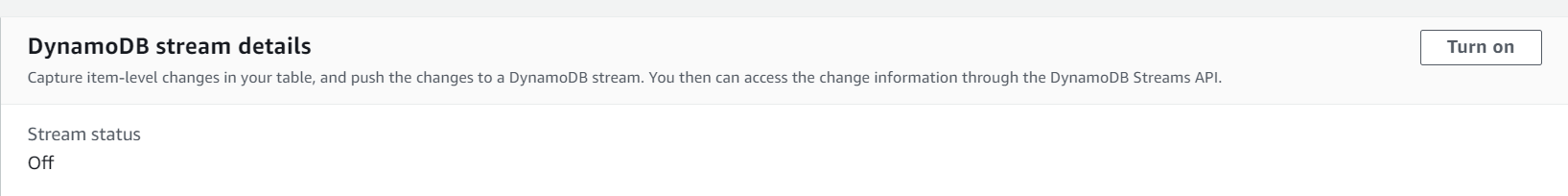

DynamoDB Streams is a feature of Amazon DynamoDB that captures a time-ordered sequence of item-level modifications in your tables. Each modification is recorded as an event in the stream, and these events can include inserts, updates, and deletes.

Key Concepts of DynamoDB Streams:

- Stream Records: Each event in a DynamoDB Stream is represented as a stream record. It includes:
  - the type of change (INSERT, MODIFY, or REMOVE);
  - the key attributes of the affected item;
  - the item’s data, as determined by the stream view type;
  - a sequence number.

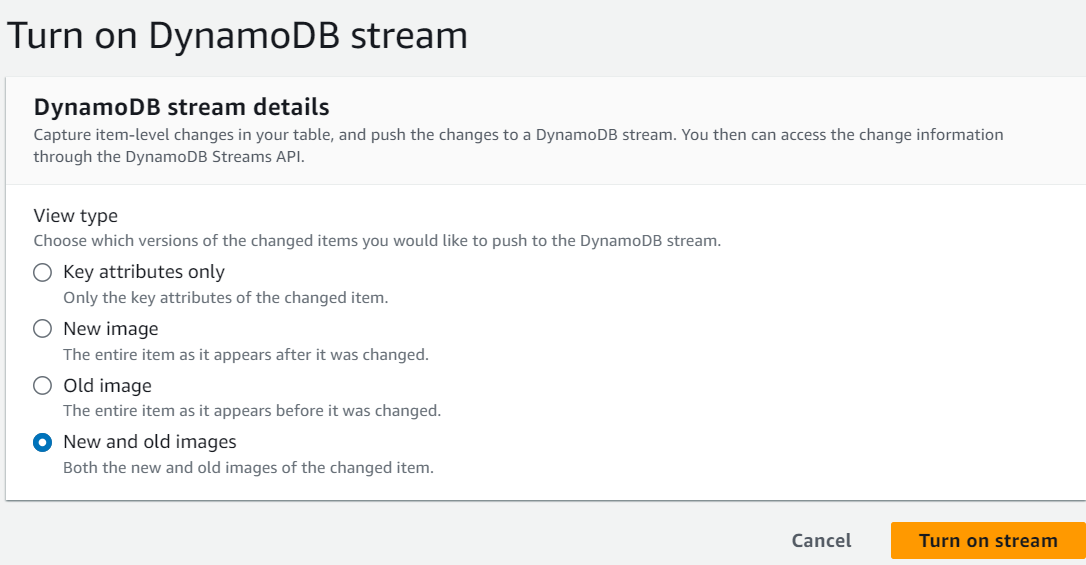

- Stream View Types: When you enable a stream, you choose one of four stream view types:

– KEYS_ONLY: Only the key attributes of the modified item.

– NEW_IMAGE: The entire item as it appears after the change.

– OLD_IMAGE: The entire item as it appeared before the change.

– NEW_AND_OLD_IMAGES: Both the new and the old images of the item.

- Time Ordering: For each item, stream records appear in the same order as the actual modifications, so you can process an item’s changes in the order they happened. Ordering is guaranteed per item, not across the whole table.

- Retention: Stream records are retained for 24 hours and then removed automatically. Consumers must keep up with the stream, or copy records elsewhere if they need a longer history.

AWS Lambda:

AWS Lambda is a serverless compute service that allows you to run code in response to various events without provisioning or managing servers. It’s a great fit for processing DynamoDB Streams because it can be triggered by stream events.

Key Concepts of AWS Lambda:

- Functions: AWS Lambda functions are units of code that can be triggered by various AWS services, including DynamoDB Streams.

- Event Sources: DynamoDB Streams can be configured as an event source for an AWS Lambda function through an event source mapping. Lambda polls the stream and invokes the function with batches of new stream records.

- Execution Environment: AWS Lambda automatically provisions and manages the execution environment for your functions, ensuring high availability and scalability.

How DynamoDB Streams and AWS Lambda Work Together:

- Configuration: You enable a DynamoDB Stream on the table of interest and choose its stream view type (KEYS_ONLY, NEW_IMAGE, OLD_IMAGE, or NEW_AND_OLD_IMAGES).

- Lambda Trigger: You configure an AWS Lambda function to be triggered by the DynamoDB Stream. When a change occurs in the table (e.g., an item is inserted, updated, or deleted), a stream record is generated.

- Event Processing: AWS Lambda automatically invokes the function as new stream records arrive. Each invocation receives a batch of stream records in the event’s Records list, and the function can process the data or trigger other actions based on each record.
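The flow above can be sketched as a Python Lambda handler. This sketch assumes the event source mapping lists `ReportBatchItemFailures` in its function response types. In that mode, returning the sequence number of a failed record tells Lambda to retry the batch from that record onward. The `process` function is a hypothetical placeholder for your own logic.

```python
import json
from typing import Any, Dict, List


def process(event_name: str, keys: Dict[str, Any], record: Dict[str, Any]) -> None:
    """Hypothetical business logic; replace with real work (indexing, alerts, ...)."""
    if event_name not in ("INSERT", "MODIFY", "REMOVE"):
        raise ValueError(f"unexpected eventName {event_name!r}")
    print(json.dumps({"event": event_name, "keys": keys}))


def lambda_handler(event: Dict[str, Any], context: Any) -> Dict[str, List[Dict[str, str]]]:
    """Process a batch of DynamoDB stream records delivered by Lambda."""
    for record in event.get("Records", []):
        change = record["dynamodb"]
        try:
            process(record["eventName"], change["Keys"], record)
        except Exception:
            # Report the first failure. Lambda retries from this sequence number,
            # so the later records in this batch will be delivered again as well.
            return {"batchItemFailures": [{"itemIdentifier": change["SequenceNumber"]}]}
    return {"batchItemFailures": []}
```

If `ReportBatchItemFailures` is not enabled, Lambda ignores the returned failures and treats the invocation as successful, so the failed record would be skipped. In that configuration, re-raise the exception instead so that the whole batch is retried.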

Example Use Cases:

- Real-time Analytics: You can use DynamoDB Streams and AWS Lambda to capture changes in a DynamoDB table and aggregate statistics in real-time, such as counting the number of items in a specific category.

- Data Replication: You can replicate data from a DynamoDB table to another data store (e.g., Elasticsearch or another database) by processing stream records with AWS Lambda.

- Automated Alerts: Trigger notifications or alerts when specific events occur in your DynamoDB table, such as notifying users when their account balance falls below a certain threshold.

- Audit Trails: Maintain an audit trail of all changes made to your data by storing stream records in a separate audit log.

- Materialized Views: Update materialized views in response to changes in your DynamoDB table, allowing for optimized querying.

Best Practices and Considerations:

– Make your Lambda function code idempotent. When an invocation fails, Lambda retries the batch, so the same record can be processed more than once.

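– Plan for errors: configure a maximum number of retries and an on-failure destination (an Amazon SQS queue or Amazon SNS topic) on the event source mapping, so that records which keep failing don’t block processing of their shard.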

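– Be mindful of concurrency limits and scaling behavior when dealing with high-velocity streams. By default, Lambda processes each shard with one invocation at a time, so throughput depends on the number of shards and on how quickly your function handles each batch.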

– Monitor your DynamoDB Streams and Lambda function for performance and cost optimization.
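This sketch combines the real-time analytics use case with the idempotency advice above. It keeps per-category item counts from INSERT, MODIFY, and REMOVE records, and it skips records whose `eventID` it has already applied, so a retried batch does not double-count. It assumes the NEW_AND_OLD_IMAGES view type and a hypothetical `Category` string attribute. In a real deployment, the counts and the set of processed IDs would live in durable storage (for example, another DynamoDB table) rather than in memory.

```python
from collections import Counter
from typing import Any, Dict, Iterable, Optional, Set


def _category(image: Optional[Dict[str, Dict[str, str]]]) -> Optional[str]:
    """Read the hypothetical Category attribute (a DynamoDB string) from an image."""
    if not image or "Category" not in image:
        return None
    return image["Category"]["S"]


def apply_records(records: Iterable[Dict[str, Any]], counts: Counter, seen: Set[str]) -> None:
    """Update per-category item counts; duplicate deliveries are ignored."""
    for record in records:
        if record["eventID"] in seen:
            continue  # already applied during an earlier (retried) invocation
        change = record["dynamodb"]
        before = _category(change.get("OldImage"))  # absent for INSERT
        after = _category(change.get("NewImage"))   # absent for REMOVE
        if before != after:  # INSERT, REMOVE, or a MODIFY that changes category
            if before is not None:
                counts[before] -= 1
            if after is not None:
                counts[after] += 1
        seen.add(record["eventID"])
```

A MODIFY that leaves the category unchanged does not alter the counts, because only the transition from the old image to the new image matters.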

DynamoDB Streams and AWS Lambda provide a powerful mechanism for building real-time applications and automating workflows based on changes in your DynamoDB tables. By using these services together, you can create responsive, event-driven applications that react to data changes in near real-time.

DynamoDB TTL

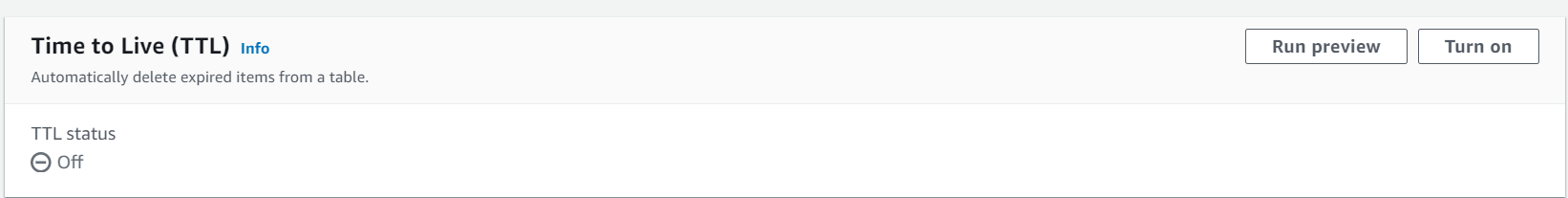

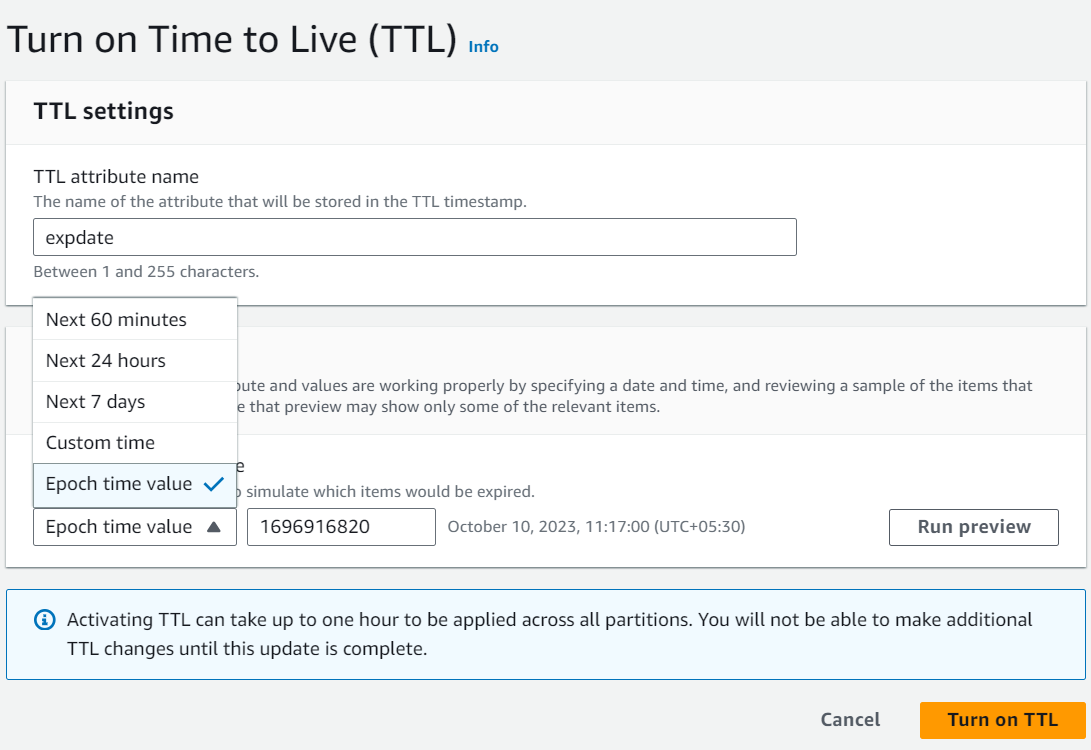

Time to Live (TTL) is a feature offered by various databases, including Amazon DynamoDB, that lets you mark a record or item to be deleted automatically once a given point in time has passed. TTL is a valuable tool for managing data that has a limited lifespan or for implementing data retention policies. In this explanation, I’ll focus on DynamoDB’s Time to Live feature in detail:

Key Concepts of Time to Live (TTL) in DynamoDB:

- Expiration Timestamp:

– Each item in a DynamoDB table can have an associated expiration timestamp, which is a Unix timestamp (expressed in seconds).

– The expiration timestamp indicates when the item expires and becomes eligible for automatic deletion from the table.

- TTL Attribute:

– When you enable TTL on a table, you choose which attribute holds the expiration timestamp. DynamoDB doesn’t enforce a schema beyond the key attributes, so you simply include this attribute in the items that should expire.

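– The TTL attribute is not indexed automatically. If you need to query items by expiration time, you must create a secondary index on it yourself.

- Item Deletion:

– A background process periodically checks items’ TTL attributes to find those whose timestamps have passed.

– Once an item’s expiration timestamp has passed, DynamoDB deletes it in the background, typically within a few days rather than at the exact expiration time. Until then, the expired item can still be returned by reads. TTL deletions don’t consume write capacity.

- Automatic Cleanup: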

– TTL-based item deletion is an automated and hands-free process. Once you enable TTL on a table, DynamoDB takes care of removing expired items without manual intervention.
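Because TTL deletion runs in the background, an item whose expiration time has passed can still be returned by reads until it is actually removed. A common pattern is to filter such items out in the application, as in this sketch. The attribute name `expires_at` is a hypothetical choice, and items are plain dictionaries rather than SDK responses. The same check can also be expressed as a filter expression on a query or scan.

```python
import time
from decimal import Decimal
from typing import Dict, Iterable, List, Optional

TTL_ATTRIBUTE = "expires_at"  # hypothetical name chosen when enabling TTL


def is_live(item: Dict[str, object], now: Optional[int] = None) -> bool:
    """True unless the item has a numeric TTL value that is already in the past."""
    now = int(time.time()) if now is None else now
    expires_at = item.get(TTL_ATTRIBUTE)
    # TTL ignores items whose attribute is missing or not a Number.
    # (boto3 returns DynamoDB numbers as Decimal, so accept that too.)
    if isinstance(expires_at, bool) or not isinstance(expires_at, (int, float, Decimal)):
        return True
    return expires_at > now


def live_items(items: Iterable[Dict[str, object]], now: Optional[int] = None) -> List[Dict[str, object]]:
    """Drop items that have expired but may not have been deleted yet."""
    now = int(time.time()) if now is None else now
    return [item for item in items if is_live(item, now)]
```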

How to Use TTL in DynamoDB:

- Enable TTL on a Table:

– To use TTL, you must enable it on the DynamoDB table where you want to manage expiration.

– You can enable TTL on an existing table at any time. Infrastructure-as-code tools such as AWS CloudFormation also let you specify it when the table is defined.

- Define the TTL Attribute:

– Choose the attribute that will hold each item’s expiration timestamp; you supply its name when you enable TTL.

– The TTL attribute must be of the Number data type.

- Set TTL Values:

– When inserting or updating items in the table, set the value of the TTL attribute to the Unix timestamp when you want the item to expire.

– Items that lack the TTL attribute, or whose TTL value is not a Number, are ignored by TTL and never expire automatically.
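When writing items, the TTL value is simply the current Unix time in seconds plus the desired lifetime. The hypothetical helper below operates on plain dictionaries. It also rejects a current time that looks like milliseconds, a common mistake that would push expiration tens of thousands of years into the future. The attribute name and the plausibility bound are assumptions for illustration.

```python
import time
from typing import Dict, Optional

TTL_ATTRIBUTE = "expires_at"  # hypothetical attribute name configured for TTL
# Epoch seconds stay below this until the year 2286; epoch milliseconds exceed it.
MAX_PLAUSIBLE_EPOCH_SECONDS = 10**10


def with_ttl(item: Dict[str, object], lifetime_seconds: int, now: Optional[int] = None) -> Dict[str, object]:
    """Return a copy of item whose TTL attribute expires lifetime_seconds from now."""
    if lifetime_seconds <= 0:
        raise ValueError("lifetime_seconds must be positive")
    now = int(time.time()) if now is None else now
    if now >= MAX_PLAUSIBLE_EPOCH_SECONDS:
        raise ValueError("'now' looks like milliseconds; TTL expects epoch seconds")
    return {**item, TTL_ATTRIBUTE: now + lifetime_seconds}


# Keep a cache entry for one hour.
entry = with_ttl({"pk": "cache#home-page", "body": "cached page"}, lifetime_seconds=3600, now=1_700_000_000)
print(entry["expires_at"])  # 1700003600
```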

Use Cases for Time to Live (TTL) in DynamoDB:

- Session Management: Automatically expire user sessions after a period of inactivity by moving the session’s TTL value forward each time it is used.

- Cache Management: Use DynamoDB as a caching layer, and set TTL for cache entries to keep the cache fresh.

- Data Retention Policies: Implement data retention policies by setting TTL for logs or historical data.

- Temporary Data: Store temporary data that should be automatically cleaned up after a specific duration.

- Scheduled Tasks: Schedule the automatic removal of items that are no longer needed.
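For session management, an inactivity timeout can be implemented as a sliding expiration: every time the session is used, its TTL is moved forward. Below is a sketch with a hypothetical 30-minute timeout and attribute name, using plain dictionaries. In DynamoDB, the refresh would be an UpdateItem call that sets the TTL attribute. The read path should still treat an expired but not yet deleted session as invalid.

```python
import time
from typing import Dict, Optional

TTL_ATTRIBUTE = "expires_at"     # hypothetical TTL attribute name
IDLE_TIMEOUT_SECONDS = 30 * 60   # hypothetical 30-minute inactivity window


def _now(now: Optional[int]) -> int:
    return int(time.time()) if now is None else now


def new_session(session_id: str, user_id: str, now: Optional[int] = None) -> Dict[str, object]:
    """Create a session that expires after IDLE_TIMEOUT_SECONDS of inactivity."""
    return {"session_id": session_id, "user_id": user_id,
            TTL_ATTRIBUTE: _now(now) + IDLE_TIMEOUT_SECONDS}


def touch(session: Dict[str, object], now: Optional[int] = None) -> bool:
    """Extend an active session; return False if it has already expired."""
    current = _now(now)
    if session[TTL_ATTRIBUTE] <= current:
        return False  # expired, even if TTL has not deleted it yet
    session[TTL_ATTRIBUTE] = current + IDLE_TIMEOUT_SECONDS
    return True
```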

Considerations and Best Practices:

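– TTL in DynamoDB is designed for data that has a predictable expiration time. It is not suited to deletions that must happen at an exact moment, because expired items are removed asynchronously.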

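– The TTL attribute must be a Number containing a Unix timestamp in seconds; a value in milliseconds would push expiration far into the future. The attribute is also not indexed, so querying by expiration time requires your own secondary index.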

– Ensure your system’s clock is synchronized with an accurate time source to prevent unexpected item deletions.

– Test TTL settings thoroughly to ensure items are expired and deleted as expected.

DynamoDB’s Time to Live (TTL) feature simplifies data management by automatically removing expired records, helping you keep your database clean and avoid unnecessary storage costs. It’s a valuable tool for scenarios where data has a known expiration time or when implementing data retention policies.

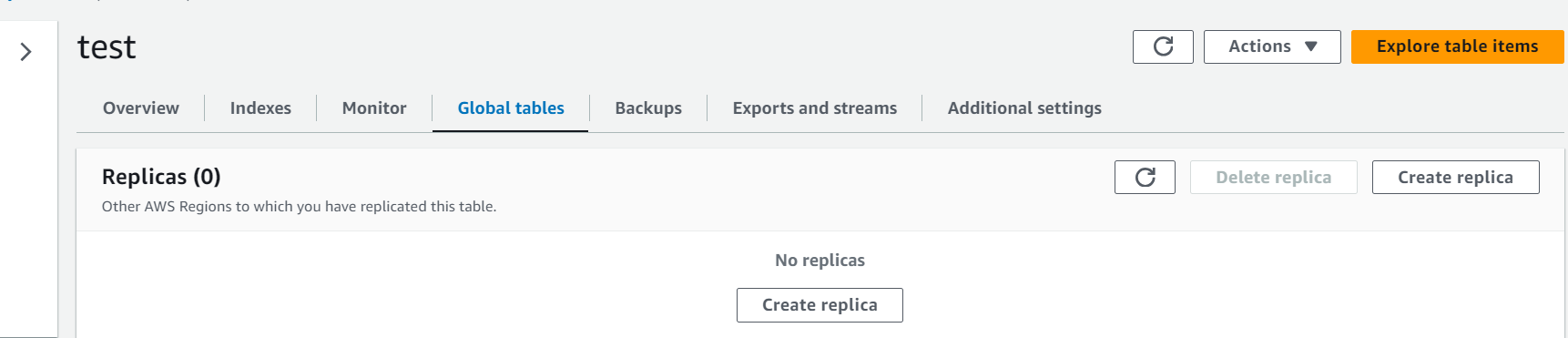

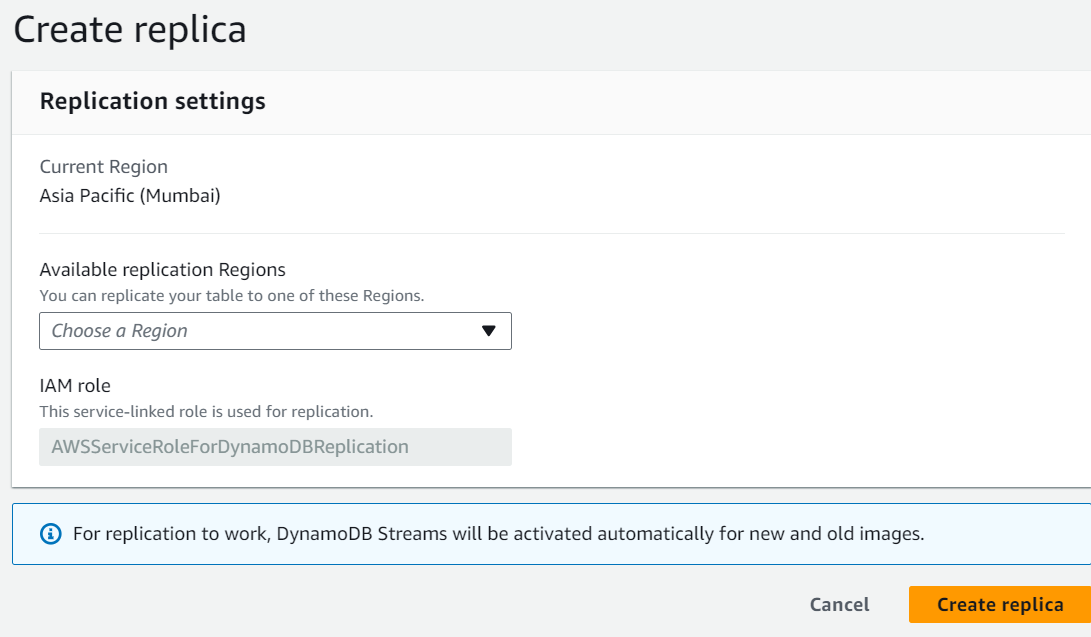

Global Tables

Amazon DynamoDB Global Tables is a fully managed, multi-region, and highly available database replication feature provided by AWS DynamoDB. It allows you to create and manage distributed databases that can provide fast and responsive access to data globally. In this detailed explanation, I’ll cover the key aspects and benefits of DynamoDB Global Tables:

Key Concepts of DynamoDB Global Tables:

- Multi-Region Replication:

– DynamoDB Global Tables enable you to replicate your DynamoDB tables across multiple AWS regions around the world.

– This replication provides disaster recovery, low-latency access, and global data distribution capabilities.

- Active-Active Architecture:

– Global Tables support an active-active architecture, meaning that each replica (in different regions) can independently serve read and write requests.

– This architecture enables you to have read and write operations in multiple regions, providing low-latency access to data for users across the globe.

- Conflict Resolution:

– DynamoDB Global Tables automatically resolve conflicting write operations to the same item in different regions.

– Conflicts are resolved with a last-writer-wins policy: the write with the latest timestamp is kept. Global tables do not offer a custom conflict resolution policy.

- Consistency Model:

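– In the default configuration, replication between regions is asynchronous and usually completes within a second. Reads are eventually consistent by default. A strongly consistent read returns the latest write made in the same region, but it may not reflect a write made moments earlier in another region.

- Global Secondary Indexes (GSIs):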

– GSIs are also replicated across regions in a Global Table, providing efficient querying capabilities on replicated data.
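Last writer wins can be pictured as follows. Each replica keeps the version of an item with the latest write timestamp, so once replication settles, every region holds the same value and the earlier concurrent write is discarded. This is a conceptual model only: the `Version` type, region names, timestamps, and the tie-breaking rule are illustrative assumptions. DynamoDB does not expose its internal mechanism this way.

```python
from dataclasses import dataclass
from typing import Dict, Iterable


@dataclass(frozen=True)
class Version:
    value: str
    written_at_ms: int  # write timestamp assigned when the write was accepted
    region: str         # region that accepted the write


def newer(a: Version, b: Version) -> Version:
    """Last writer wins; ties broken by region name (an assumption for determinism)."""
    return max(a, b, key=lambda v: (v.written_at_ms, v.region))


def converge(replicas: Dict[str, Version], incoming: Iterable[Version]) -> None:
    """Apply every replicated write to every replica, keeping the newer version."""
    writes = list(incoming)
    for region in replicas:
        for write in writes:
            replicas[region] = newer(replicas[region], write)


start = Version("123 Main St", 1_000, "us-east-1")
replicas = {"us-east-1": start, "eu-west-1": start}
# Concurrent updates to the same item: each region applies its own write first.
w_us = Version("9 Elm Ave", 2_000, "us-east-1")
w_eu = Version("4 Rue Cler", 2_050, "eu-west-1")
replicas["us-east-1"] = newer(replicas["us-east-1"], w_us)
replicas["eu-west-1"] = newer(replicas["eu-west-1"], w_eu)
converge(replicas, [w_us, w_eu])
print({r: v.value for r, v in replicas.items()})  # both regions hold '4 Rue Cler'
```

The us-east-1 update is silently lost. This is why concurrent writes to the same item from different regions deserve careful planning.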

Benefits of DynamoDB Global Tables:

- High Availability and Disaster Recovery:

– Data is replicated across multiple regions, ensuring high availability and disaster recovery capabilities.

– In the event of a regional outage, your application can continue to operate using data from other available regions.

- Low-Latency Access:

– Users located in different regions can access data from the nearest replica, reducing latency for read and write operations.

- Global Data Distribution:

– DynamoDB Global Tables enable global data distribution, making it easier to provide a consistent experience for users worldwide.

- Simplified Application Logic:

– Every replica table has the same name and key schema, so the same application code can run in every region while DynamoDB handles replication between them.

- Failover:

– Because every replica accepts reads and writes, there is no primary region to fail over from. If a region becomes unavailable, your application redirects traffic to a replica in another region, for example through DNS-based routing with Amazon Route 53. DynamoDB does not reroute your requests automatically.
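On the client side, a simple way to route requests away from an unavailable region is to keep an ordered list of replica regions and use the first one considered healthy. In this minimal sketch, how health is determined (health checks, error rates, Route 53) is left abstract. The function name, region list, and outage are hypothetical.

```python
from typing import Callable, Sequence


def pick_region(preferred: Sequence[str], is_healthy: Callable[[str], bool]) -> str:
    """Return the first healthy region in preference order (nearest first)."""
    for region in preferred:
        if is_healthy(region):
            return region
    raise RuntimeError("no healthy replica region available")


# A user in Europe prefers eu-west-1, then falls back to other replicas.
preference = ["eu-west-1", "eu-central-1", "us-east-1"]
outage = {"eu-west-1"}  # hypothetical: eu-west-1 is currently unreachable
print(pick_region(preference, lambda r: r not in outage))  # eu-central-1
```

The chosen region would then be used when creating the SDK client, for example as the `region_name` of a boto3 DynamoDB client. This works because every replica shares the same table name.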

Considerations and Use Cases:

- Use Cases:

– Global customer-facing applications with a worldwide user base.

– Multi-region disaster recovery and business continuity requirements.

– Reducing latency for read and write operations by serving data from regions closer to users.

- Write Costs:

– Be aware that every write is replicated to each replica region and billed there as a replicated write. Write costs therefore grow with the number of regions, and cross-region data transfer charges come on top.

- Conflict Resolution:

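– Because conflicts are resolved with last writer wins, a concurrent write in another region can silently overwrite a change. If that is unacceptable, avoid concurrent writes to the same item from different regions, for example by routing each user’s writes to a single home region.

- Region Selection: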

– Choose AWS regions for replication based on your application’s geographical distribution and latency requirements.
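Here is a rough estimate of the write-cost point above. A standard write consumes one write unit per 1 KB of item size, rounded up, and in a global table each write is applied in every replica region. The hypothetical function below multiplies those factors. It ignores pricing differences between regions, transactional writes, index writes, and data transfer.

```python
def replicated_write_units(item_size_bytes: int, writes: int, regions: int) -> int:
    """Approximate write units consumed across all replicas of a global table."""
    if item_size_bytes <= 0 or writes < 0 or regions < 1:
        raise ValueError("invalid input")
    units_per_write = -(-item_size_bytes // 1024)  # 1 KB granularity, rounded up
    return units_per_write * writes * regions


# 1 million writes of 2.5 KB items: 3 units each, applied in 3 regions.
print(replicated_write_units(2_560, 1_000_000, regions=3))  # 9000000
```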

Example Scenario:

Imagine you have a global e-commerce application using DynamoDB Global Tables. Users from different parts of the world can place orders, and the data is replicated across multiple regions. If one region experiences a network issue or an outage, users can still access their order history and continue shopping using the data stored in other regions. The exception is any writes that had not yet been replicated when the outage began, which may not be visible there.

DynamoDB Global Tables provide a powerful solution for creating globally distributed and highly available applications with low-latency access to data. It simplifies the complexities of managing multi-region databases and enables you to focus on building scalable and resilient applications.

Mobile Application Back-end Services for Short News App Using AWS Cloud

An entertainment and news company needed a scalable and reliable backend for their Short News App, capable of handling user authentication, user data storage, media storage, push notifications, and other backend operations. After thorough consideration of different options, we chose Amazon Web Services (AWS) cloud as the ideal backend for their mobile application.